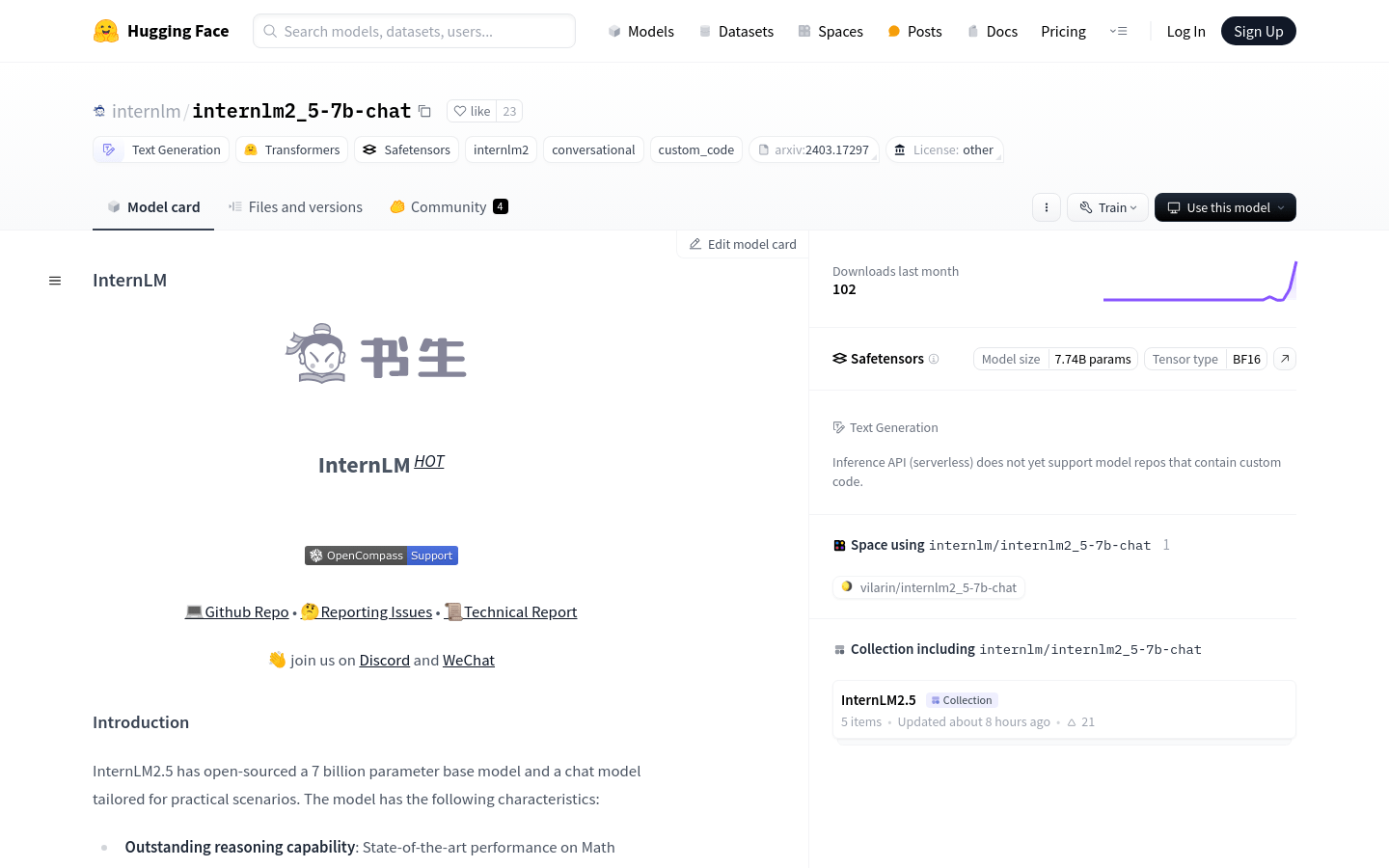

InternLM2.5-7B-Chat

A high-performance dialogue model with 7 billion parameters

- Excellent performance in mathematical reasoning, surpassing models of similar size.

- Supports an ultra-long context window of up to 1M tokens, suitable for long-text processing.

- Capable of collecting information from multiple web pages for analysis and reasoning.

- Able to understand instructions, select appropriate tools, and reflect on the results.

- Supports model deployment and API serving through LMDeploy and vLLM.

- The code is open-sourced under the Apache-2.0 license, and the model weights are fully open for academic research.
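Both LMDeploy and vLLM can serve a model behind an OpenAI-compatible HTTP API. The following is a minimal sketch, assuming such a server is already running, that builds and sends a chat-completion request using only the Python standard library. The base URL, port, and model name are assumptions: the `model` field must match the name the server registers, and LMDeploy and vLLM use different default ports, so adjust them to match how the server was launched.

```python
import json
import urllib.request

# Assumption: a server launched with LMDeploy or vLLM exposes an
# OpenAI-compatible /v1/chat/completions endpoint at this address.
BASE_URL = "http://localhost:8000/v1"  # hypothetical host and port
MODEL = "internlm/internlm2_5-7b-chat"  # assumed name registered by the server


def build_chat_request(messages, model=MODEL, max_tokens=512, temperature=0.7):
    """Return the JSON body for an OpenAI-style chat completion request."""
    return {
        "model": model,
        "messages": messages,
        "max_tokens": max_tokens,
        "temperature": temperature,
    }


def send_chat_request(body, base_url=BASE_URL, timeout=60):
    """POST the body to the server and return the assistant's reply text."""
    req = urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        payload = json.load(resp)
    # OpenAI-style responses place the reply under choices[0].message.content
    return payload["choices"][0]["message"]["content"]


if __name__ == "__main__":
    body = build_chat_request([
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "What is 17 * 24?"},
    ])
    # Print the request body; no network call is made here.
    print(json.dumps(body, indent=2))
    # Once a server is running, send the request:
    # print(send_chat_request(body))
```

Keeping request construction separate from the network call makes the payload easy to inspect or test without a running server.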

Product Details

InternLM2.5-7B-Chat is an open-source, 7-billion-parameter Chinese dialogue model designed for practical scenarios. Its reasoning capabilities are strong, surpassing models such as Llama3 and Gemma2-9B in mathematical reasoning. It can gather information from hundreds of web pages for analysis and reasoning, offers powerful tool-calling capabilities, and supports a 1M-token context window, making it suitable for building intelligent agents that handle long-text processing and complex tasks.