FunAudioLLM

A Basic Model for Speech Understanding and Generation in Natural Interaction

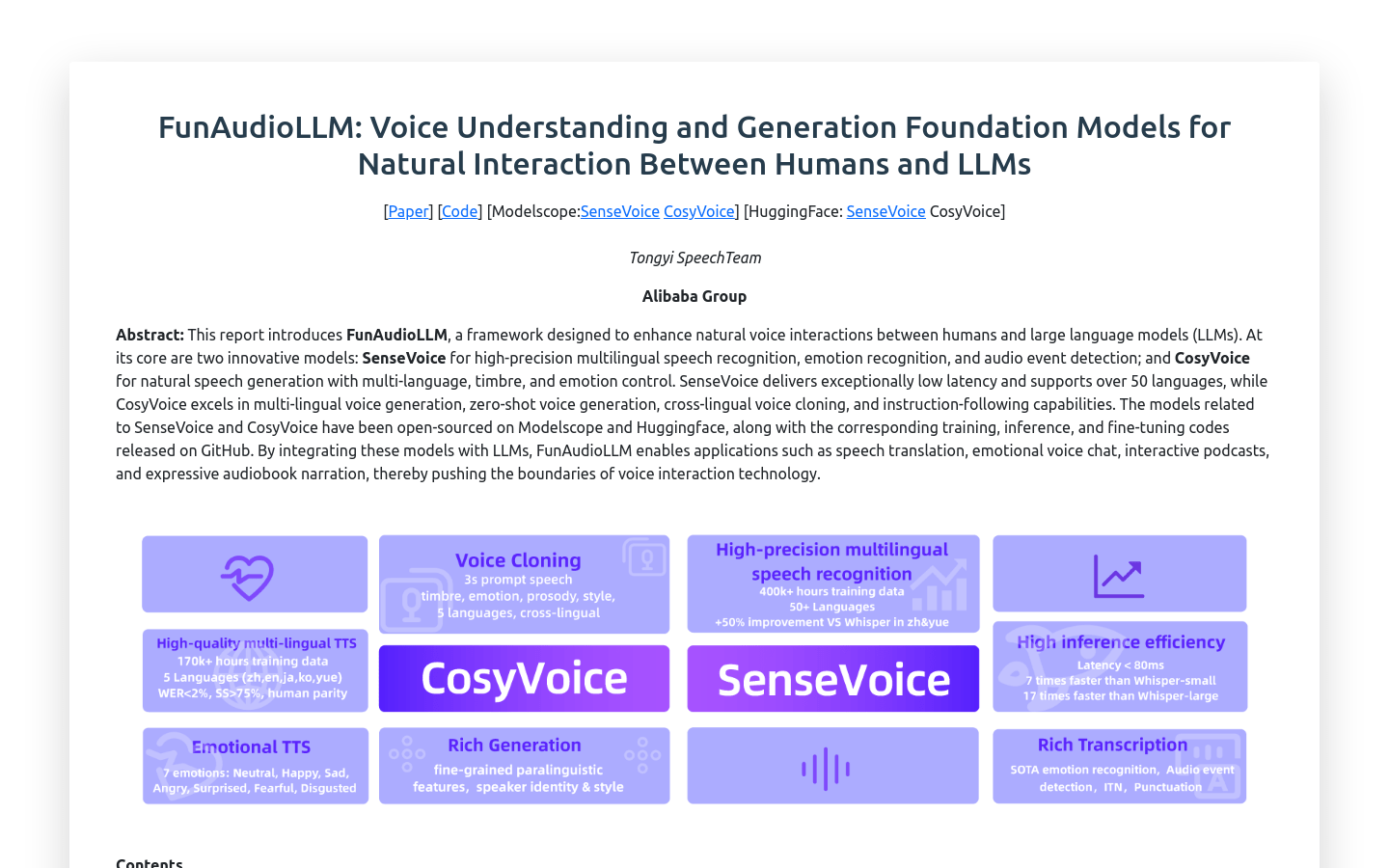

- High precision multilingual speech recognition: supports speech recognition for over 50 languages with extremely low latency.

- Emotion recognition: capable of recognizing emotions in speech and enhancing interactive experience.

- Audio event detection: Identify specific events in audio, such as music, applause, laughter, etc.

- Natural speech generation: The CosyVoice model can generate speech with natural fluency and multilingual support.

- Zero sample context generation: Generate speech with specific context without additional training.

- Cross language speech cloning: capable of replicating speech styles from different languages.

- Instruction following ability: Generate corresponding style of speech based on user instructions.

Product Details

FunAudioLLM is a framework designed to enhance natural speech interaction between humans and Large Language Models (LLMs). It includes two innovative models: SenseVoice is responsible for high-precision multilingual speech recognition, emotion recognition, and audio event detection; CosyVoice is responsible for natural speech generation, supporting multiple languages, timbre, and emotion control. SenseVoice supports over 50 languages and has extremely low latency; CosyVoice excels in multilingual speech generation, zero sample context generation, cross language speech cloning, and instruction following capabilities. The relevant models have been open sourced on Modelscope and Huggingface, and the corresponding training, inference, and fine-tuning code has been released on GitHub.