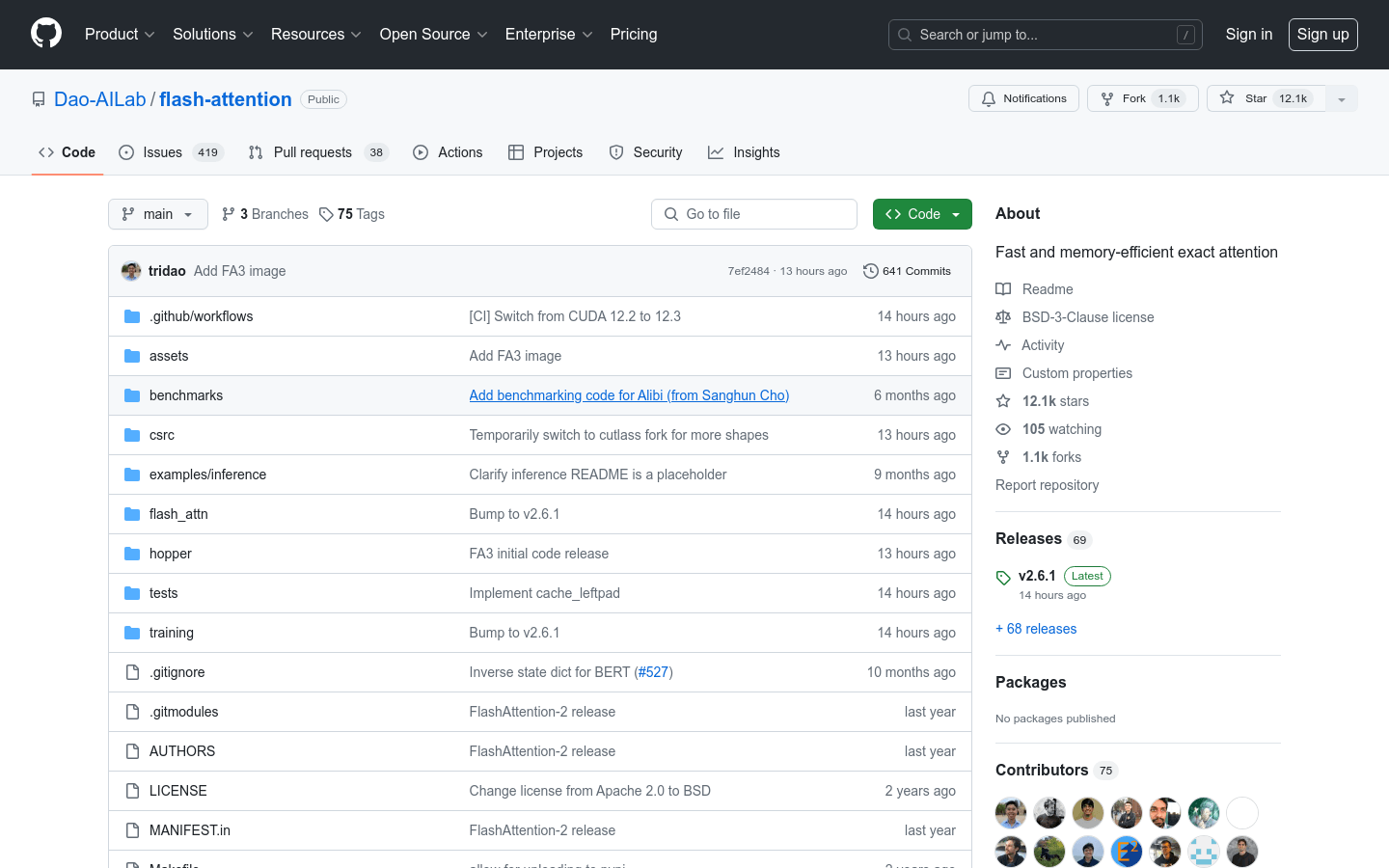

FlashAttention

Fast and memory-efficient exact attention

- Supports multiple GPU architectures, including Ampere, Ada, and Hopper.

- Supports the fp16 and bf16 data types (bf16 requires Ampere, Ada, or Hopper GPUs).

- Supports all head dimensions up to 256.

- Supports both causal and non-causal attention to suit different model requirements.

- Provides a simple API for easy integration and use.

- Supports sliding-window (local) attention, suitable for cases that need only local context.
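To make the causal and sliding-window options above concrete, the following is a minimal plain-Python sketch of which key positions each query position may attend to. The helper names `attention_mask` and `show`, and the `(left, right)` window convention, are illustrative assumptions and not the library's API; the sketch also assumes queries and keys have the same sequence length.

```python
def attention_mask(seq_len, causal=False, window=None):
    """Return a seq_len x seq_len boolean mask.

    mask[i][j] is True when query position i may attend to key position j.
    `window` is a hypothetical (left, right) pair giving how many keys
    before and after position i stay visible; None means no window limit.
    """
    mask = []
    for i in range(seq_len):
        row = []
        for j in range(seq_len):
            visible = True
            if causal and j > i:
                visible = False  # causal: never attend to future positions
            if window is not None:
                left, right = window
                if j < i - left or j > i + right:
                    visible = False  # key lies outside the local window
            row.append(visible)
        mask.append(row)
    return mask


def show(mask):
    """Render a mask as rows of 'x' (visible) and '.' (masked)."""
    return ["".join("x" if v else "." for v in row) for row in mask]


if __name__ == "__main__":
    print("\n".join(show(attention_mask(4, causal=True))))
    print()
    print("\n".join(show(attention_mask(4, window=(1, 1)))))
```

In this sketch, causal attention gives a lower-triangular pattern, while a window of `(1, 1)` keeps only a narrow band around the diagonal. Combining the two, for example `causal=True` with `window=(2, 0)`, gives a band that looks backward only.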

Product Details

FlashAttention is an open-source attention library for Transformer models in deep learning, designed to speed up attention and reduce its memory usage. Its algorithm is IO-aware: it accounts for reads and writes between levels of GPU memory, which cuts memory usage while still producing exact (not approximate) attention results. FlashAttention-2 further improves parallelism and work partitioning, while FlashAttention-3 is optimized for Hopper GPUs and supports the FP16 and BF16 data types.
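The idea of saving memory without losing exactness can be illustrated with a small plain-Python sketch. It is not the library's GPU implementation, and all function names and the `block_size` parameter are assumptions for illustration. `tiled_attention` processes keys and values one block at a time and keeps only a running maximum, a running normalizer, and an output accumulator per query, so the full score matrix is never stored. `naive_attention` is the standard reference it should match.

```python
import math


def naive_attention(q, k, v):
    """Reference attention: builds the full row of scores for each query."""
    scale = 1.0 / math.sqrt(len(q[0]))
    out = []
    for qi in q:
        scores = [scale * sum(a * b for a, b in zip(qi, kj)) for kj in k]
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        out.append([
            sum(w * vj[d] for w, vj in zip(weights, v)) / total
            for d in range(len(v[0]))
        ])
    return out


def tiled_attention(q, k, v, block_size=2):
    """Same result as naive_attention, consuming keys/values block by block."""
    scale = 1.0 / math.sqrt(len(q[0]))
    dim_v = len(v[0])
    out = []
    for qi in q:
        running_max = float("-inf")  # largest score seen so far
        running_sum = 0.0            # softmax normalizer under running_max
        acc = [0.0] * dim_v          # unnormalized weighted sum of values
        for start in range(0, len(k), block_size):
            k_blk = k[start:start + block_size]
            v_blk = v[start:start + block_size]
            scores = [scale * sum(a * b for a, b in zip(qi, kj)) for kj in k_blk]
            new_max = max(running_max, max(scores))
            # Rescale earlier partial results to the new maximum.
            correction = math.exp(running_max - new_max)
            running_sum *= correction
            acc = [a * correction for a in acc]
            for s, vj in zip(scores, v_blk):
                w = math.exp(s - new_max)
                running_sum += w
                for d in range(dim_v):
                    acc[d] += w * vj[d]
            running_max = new_max
        out.append([a / running_sum for a in acc])
    return out
```

Because the rescaling step keeps the partial sums consistent with the current maximum, the blockwise result agrees with the reference up to floating-point rounding for any block size. This is why the approach counts as exact and not an approximation.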