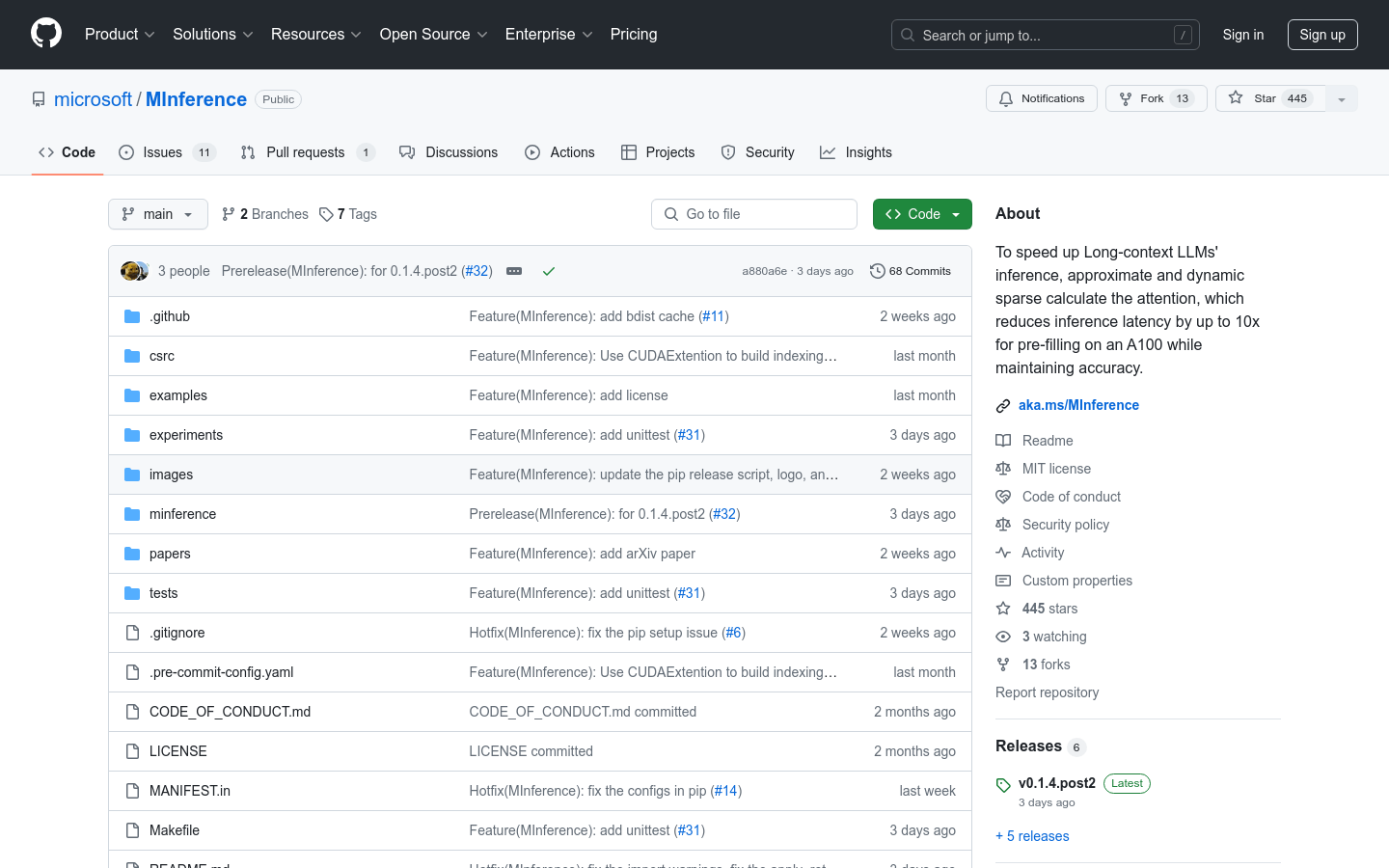

MInference

Accelerates inference for long-context large language models

- Dynamic Sparse Attention Pattern Recognition: Analyzes each attention head to determine which sparse pattern it follows.

- Online Sparse Index Approximation: Approximates the sparse index at inference time and computes attention dynamically with the best-suited custom kernel.

- Broad model support: Works with a range of decoder-style large language models, including LLaMA-style models and Phi models.

- Simple installation: Install MInference quickly with pip.

- Rich documentation and examples: Help users get started with MInference and apply it quickly.

- Continuous updates and community support: Support for more models is being added, and performance is continuously optimized.
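For the pip installation and model support listed above, a minimal usage sketch might look like the following. It assumes the package is published as `minference` and that the `MInference("minference", model_name)` patching call matches the project's README. The helper name `patch_with_minference` is hypothetical, so check the official documentation before relying on it.

```python
# Install first (assumed package name):  pip install minference


def patch_with_minference(model, model_name):
    """Return `model` with its attention patched by MInference.

    Hypothetical helper. The import is deferred so that defining this
    function does not require the package to be installed.
    """
    # Assumed import path, following the project's README.
    from minference import MInference

    # Arguments: attention type, then the Hugging Face model name.
    patcher = MInference("minference", model_name)
    return patcher(model)
```

With a Hugging Face `transformers` text-generation pipeline `pipe`, this would be applied as `pipe.model = patch_with_minference(pipe.model, model_name)` before running a long prompt.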

Product Details

MInference is an inference acceleration framework for long-context large language models (LLMs). It exploits the dynamic sparsity of the LLM attention mechanism: by recognizing the static patterns within that sparsity and approximating the sparse index online, it significantly speeds up the pre-filling stage. It achieves up to a 10x speedup when processing a 1M-token context on a single A100 GPU while maintaining inference accuracy.
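To make the idea of online sparse index approximation concrete, here is a toy, pure-Python sketch. It is not MInference's implementation, which relies on per-head patterns and custom GPU kernels. The function names, the estimator based on the last few queries, and the `top_k` and `local_window` parameters are all illustrative assumptions. The sketch estimates which key positions matter from a handful of queries, then computes causal attention only over those positions plus a small local window.

```python
import math
import random


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(q, cols, K, V):
    """Softmax attention of one query over the key positions in `cols`."""
    weights = softmax([dot(q, K[j]) for j in cols])
    return [sum(w * V[j][d] for w, j in zip(weights, cols))
            for d in range(len(V[0]))]


def dense_causal_attention(Q, K, V):
    """Reference: every query attends to all keys at or before its position."""
    return [attend(q, list(range(i + 1)), K, V) for i, q in enumerate(Q)]


def estimate_key_index(Q, K, last_q, top_k):
    """Cheap online estimate: rank keys by the attention mass they receive
    from only the last `last_q` queries, and keep the `top_k` best."""
    n = len(K)
    totals = [0.0] * n
    for i in range(max(0, n - last_q), n):
        weights = softmax([dot(Q[i], K[j]) for j in range(i + 1)])
        for j, w in enumerate(weights):
            totals[j] += w
    ranked = sorted(range(n), key=lambda j: totals[j], reverse=True)
    return sorted(ranked[:top_k])


def sparse_causal_attention(Q, K, V, key_index, local_window):
    """Attend only to the selected keys plus the `local_window` most recent
    positions. `local_window` must be at least 1 so each query sees itself."""
    keep = set(key_index)
    out = []
    for i, q in enumerate(Q):
        cols = [j for j in range(i + 1) if j in keep or i - j < local_window]
        out.append(attend(q, cols, K, V))
    return out


def max_abs_diff(A, B):
    return max(abs(x - y) for ra, rb in zip(A, B) for x, y in zip(ra, rb))


def random_matrix(rows, cols):
    return [[random.gauss(0, 1) for _ in range(cols)] for _ in range(rows)]


random.seed(0)
n, dim = 32, 4
Q, K, V = random_matrix(n, dim), random_matrix(n, dim), random_matrix(n, dim)

index = estimate_key_index(Q, K, last_q=4, top_k=8)
dense = dense_causal_attention(Q, K, V)
sparse = sparse_causal_attention(Q, K, V, index, local_window=4)
print("kept key positions:", index)
print("max abs error vs dense:", max_abs_diff(dense, sparse))
```

The estimation step touches only `last_q` rows of the attention matrix, so it is cheap relative to dense attention. The sparse step then skips most key positions. On random data like this the approximation error can be large, because the approach depends on real attention heads concentrating their mass on a few positions.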