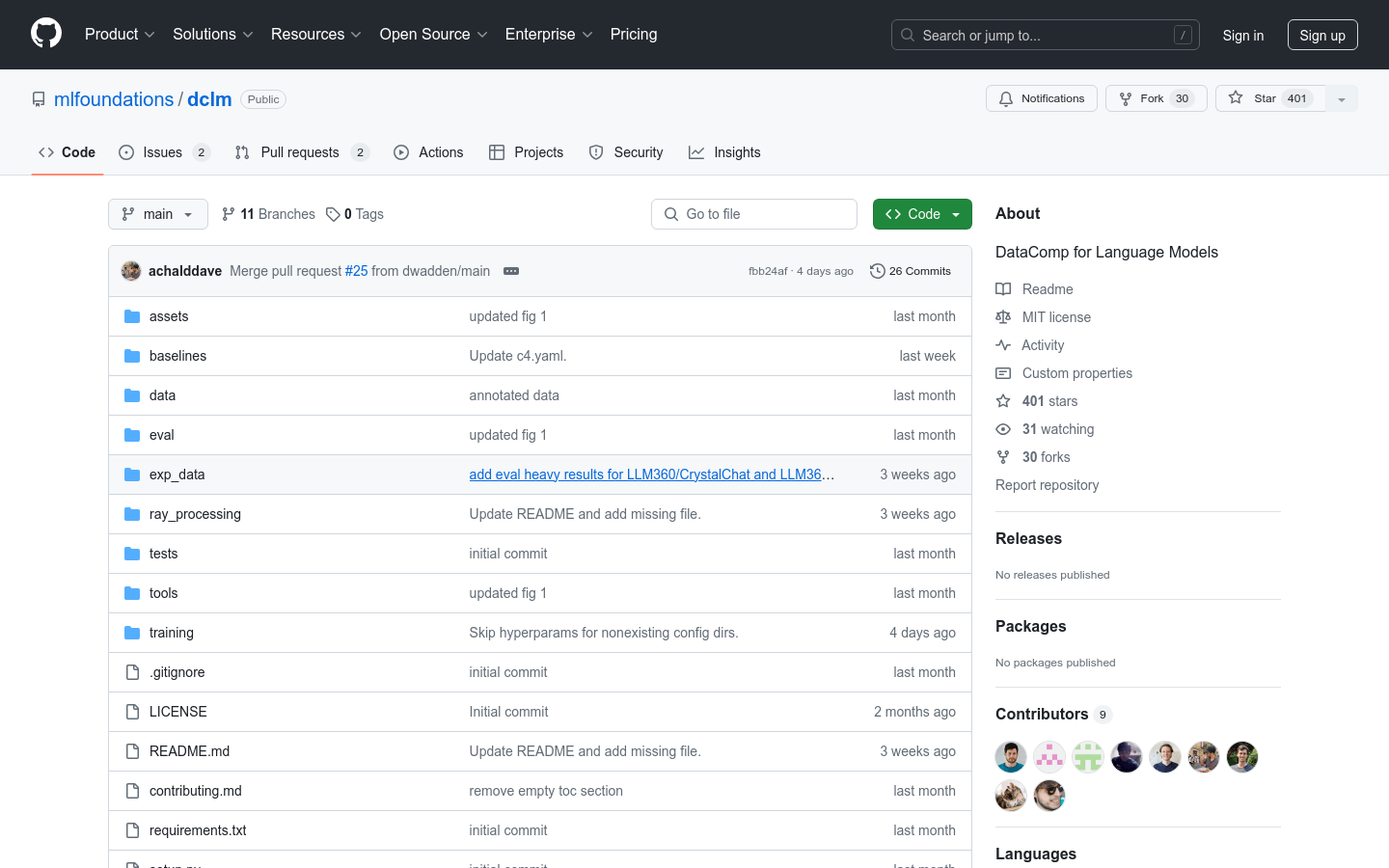

DCLM

A comprehensive framework for constructing and training large-scale language models

- Provide a corpus of over 300T unfiltered tokens from CommonCrawl

- Provide effective pretraining recipes based on the open_lm framework

- Provide over 50 evaluation methods to assess model performance

- Support different computational scales for models ranging from 411M to 7B parameters

- Allow researchers to experiment with different strategies for constructing datasets

- Improve model performance by optimizing dataset design

Product Details

DataComp-LM (DCLM) is a comprehensive framework for building and training large language models (LLMs). It provides a standardized corpus, effective pretraining recipes based on the open_lm framework, and over 50 evaluation methods. DCLM lets researchers experiment with different dataset construction strategies at several compute scales, with models ranging from 411M to 7B parameters. Through optimized dataset design, DCLM has significantly improved model performance and has enabled the creation of multiple high-quality datasets that outperform all open datasets at those scales.