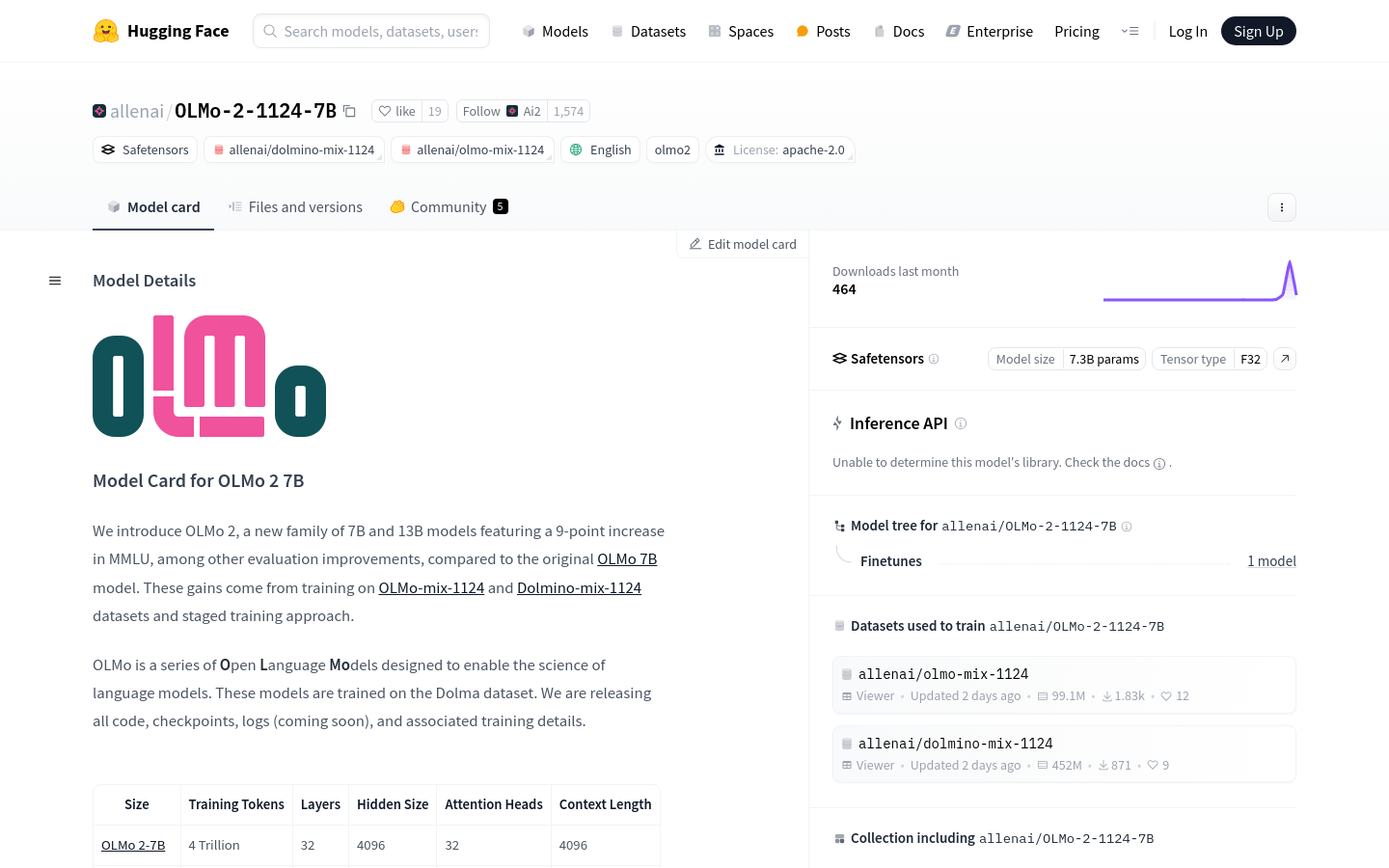

OLMo 2 7B

A large-scale language model with 7B parameters to enhance natural language processing capabilities

- Support multiple natural language processing tasks, such as text generation, question answering, and text classification

- Trained on large-scale datasets, with strong language comprehension and generation capabilities

- Open-source model that researchers and developers can build on and fine-tune

- Provide pre-trained and fine-tuned models to meet the needs of different application scenarios

- Support loading and running the model with Hugging Face's Transformers library

- Model quantization support improves the model's efficiency on hardware

- Provide detailed model usage documentation and community support to facilitate user learning and communication
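
As a rough illustration of the Transformers and quantization points above, the sketch below loads the model and generates a short continuation. It is a minimal sketch under stated assumptions, not an official recipe: the Hub identifier `allenai/OLMo-2-1124-7B` is assumed and should be checked against the model card, the helper names `load_olmo` and `generate` are hypothetical, a Transformers release with OLMo 2 support is assumed, and the 4-bit path assumes the optional `bitsandbytes` package and a CUDA GPU.

```python
# Assumed Hub identifier for the 7B base checkpoint; verify it on the model card.
MODEL_ID = "allenai/OLMo-2-1124-7B"


def load_olmo(model_id: str = MODEL_ID, quantize_4bit: bool = False):
    """Return (tokenizer, model) loaded through Hugging Face Transformers."""
    # Imported lazily so the helpers can be defined without the heavy dependency.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    kwargs = {}
    if quantize_4bit:
        # Assumption: bitsandbytes is installed and a CUDA GPU is available.
        from transformers import BitsAndBytesConfig

        kwargs["quantization_config"] = BitsAndBytesConfig(load_in_4bit=True)
        kwargs["device_map"] = "auto"

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id, **kwargs)
    return tokenizer, model


def generate(tokenizer, model, prompt: str, max_new_tokens: int = 50) -> str:
    """Tokenize the prompt, generate a continuation, and decode it to text."""
    inputs = tokenizer(
        prompt, return_tensors="pt", return_token_type_ids=False
    ).to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Calling `tokenizer, model = load_olmo()` followed by `generate(tokenizer, model, "Language modeling is ")` downloads the weights on first use, so expect a multi-gigabyte transfer. Passing `quantize_4bit=True` trades some accuracy for a smaller memory footprint.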

Product Details

OLMo 2 7B is a large-scale language model with 7B parameters developed by the Allen Institute for AI (Ai2). It has demonstrated excellent performance on multiple natural language processing tasks. Trained on large-scale datasets, the model can understand and generate natural language, and it supports language-model research and applications. The main advantages of OLMo 2 7B are its large parameter count, which enables the model to capture more subtle language features, and its open-source nature, which promotes further research and application in academia and industry.
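
To give the parameter count a concrete scale, the following back-of-the-envelope sketch estimates how much memory the weights alone would occupy at different numeric precisions. It assumes a nominal 7 billion parameters (the exact count may differ) and ignores activations, the KV cache, and any overhead a quantization format adds; the helper name `weight_memory_gb` is hypothetical.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"float32": 4.0, "bfloat16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# Nominal parameter count; an assumption, not the exact figure.
NUM_PARAMS = 7e9

for dtype in BYTES_PER_PARAM:
    print(f"{dtype:>8}: {weight_memory_gb(NUM_PARAMS, dtype):.1f} GB")
```

Under these assumptions the weights need roughly 28 GB in float32, 14 GB in bfloat16, 7 GB in int8, and 3.5 GB in int4, which is why quantized variants fit on smaller hardware.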