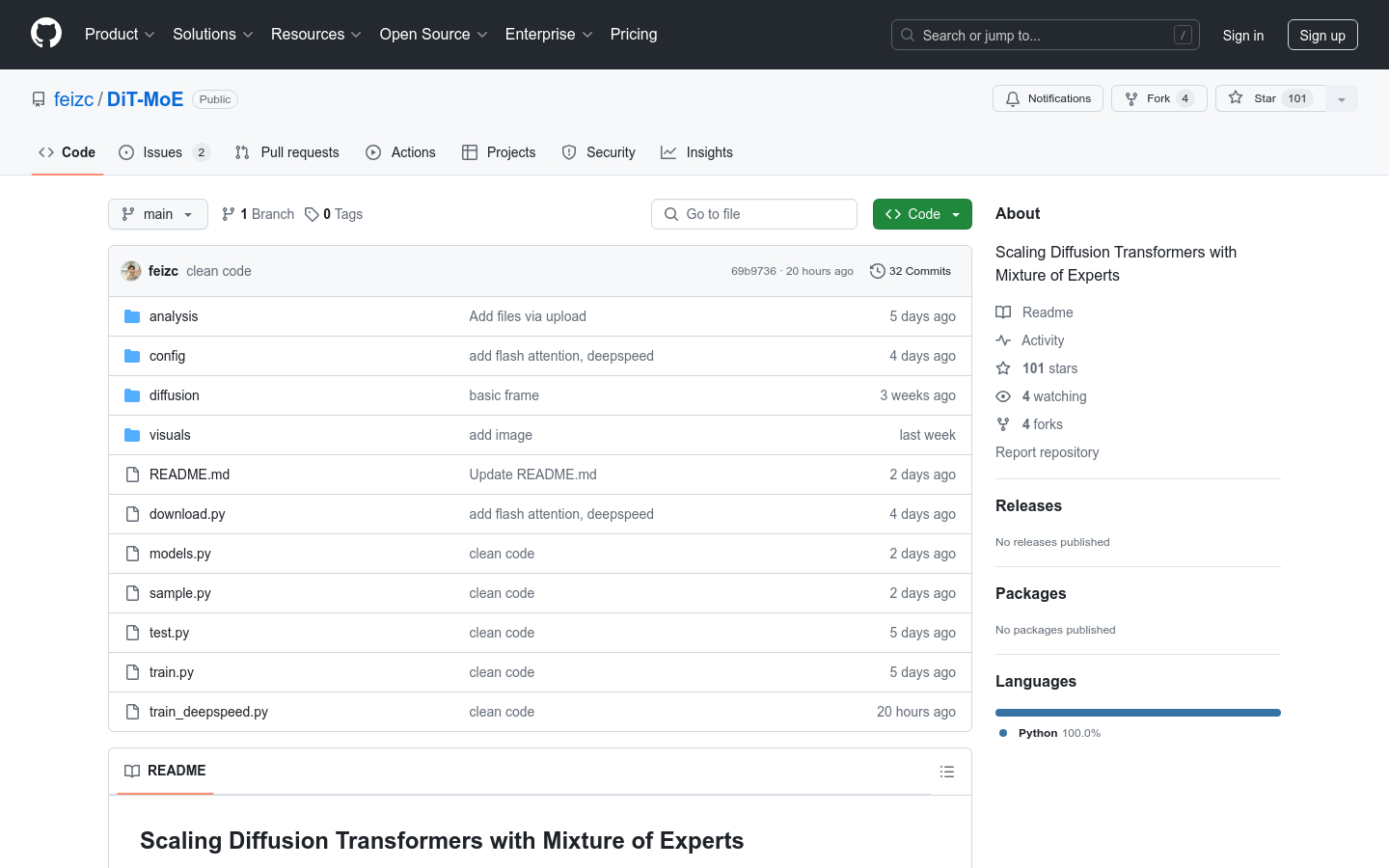

DiT-MoE

Large-scale diffusion transformer model

- Provides a PyTorch model definition

- Includes pre-trained weights

- Includes training and sampling code

- Supports scaling to large parameter counts

- Optimized inference

- Provides expert routing analysis tools

- Includes a synthetic data generation script

Product Details

DiT-MoE is a diffusion transformer model implemented in PyTorch. It scales to 16 billion parameters while remaining competitive with dense networks and offering highly optimized inference. The model targets large-scale datasets and is of interest for both deep learning research and practical applications.
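The "MoE" in the name stands for mixture of experts. In such models a router typically sends each input to only a few experts, which is one way a model can grow in total parameters without a matching growth in per-input compute. The following is a minimal pure-Python sketch of top-k routing under that assumption. It is not the DiT-MoE implementation, and the names `route_top_k`, `moe_forward`, and the toy experts are hypothetical, chosen only for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_logits, k=2):
    """Return (expert_index, weight) pairs for the k highest-scoring experts.

    Weights are renormalized so the selected experts sum to 1.
    """
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x, router_logits, experts, k=2):
    """Weighted sum of the selected experts' outputs.

    Experts that are not selected are never called.
    """
    return sum(w * experts[i](x) for i, w in route_top_k(router_logits, k))


# Hypothetical toy experts: plain scalar functions standing in for networks.
experts = [lambda x: x + 1.0, lambda x: 2.0 * x, lambda x: -x, lambda x: x * x]
logits = [0.1, 2.0, -1.0, 2.0]  # assumed router scores for one input

selected = route_top_k(logits, k=2)
output = moe_forward(3.0, logits, experts, k=2)
print(selected, output)
```

Here only two of the four toy experts run for the input, so the cost per input depends on `k` rather than on the total number of experts. Counting how often each expert index appears in `selected` across many inputs is the kind of statistic an expert routing analysis tool would report.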