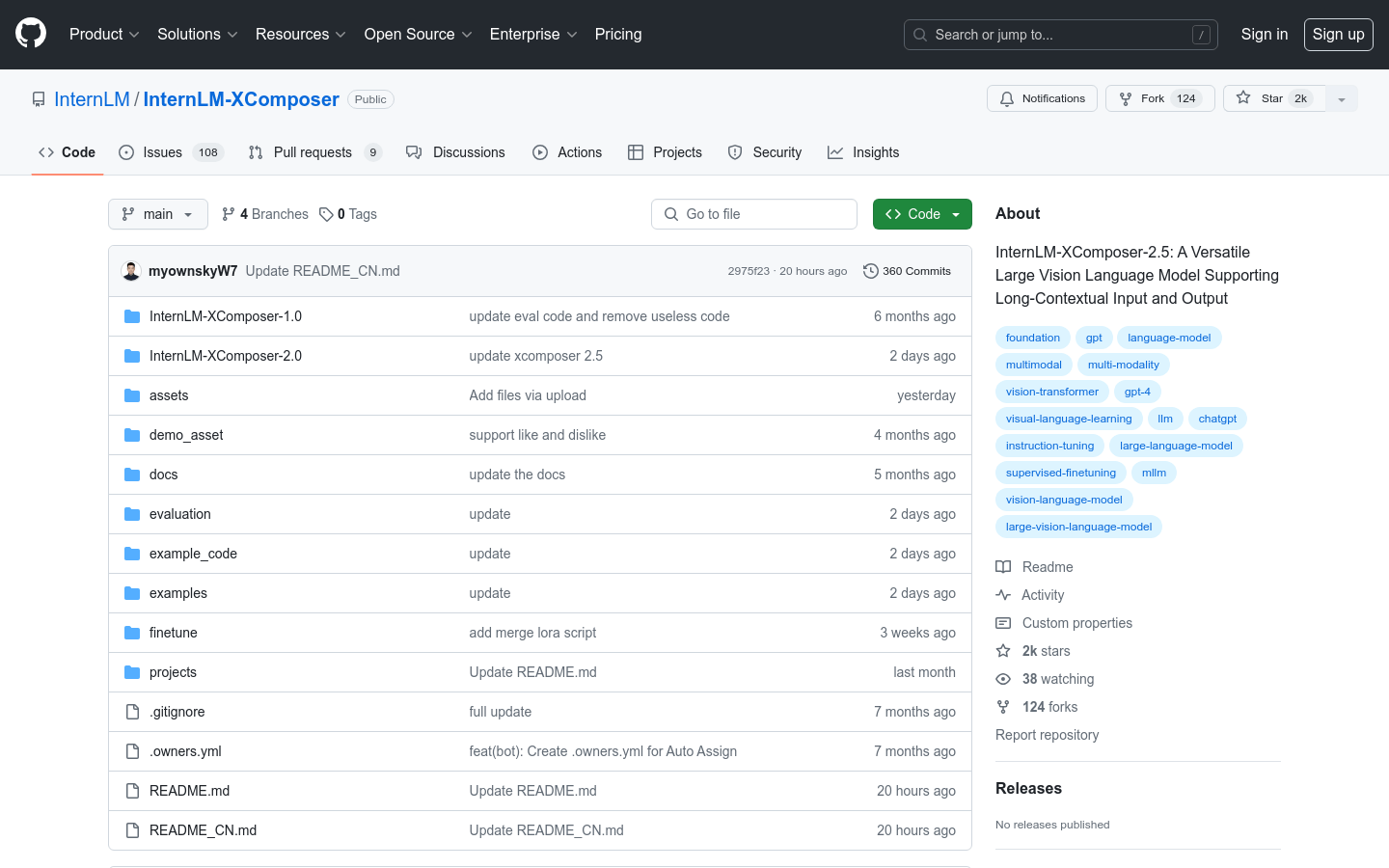

InternLM-XComposer-2.5

A versatile large vision-language model

- Long-context input and output, supporting contexts of up to 96K

- Ultra-high-resolution image understanding, supporting high-resolution images at any scale

- Fine-grained video understanding, treating a video as an ultra-high-resolution composite image made up of tens to hundreds of frames

- Multi-turn, multi-image dialogue, enabling natural multi-turn human-machine conversation

- Web page creation, writing source code (HTML, CSS, and JavaScript) from text and image instructions

- High-quality text-image article composition, using Chain-of-Thought and Direct Preference Optimization techniques to improve content quality

- Outstanding performance on 28 benchmarks, surpassing or approaching existing state-of-the-art open-source models

Product Details

InternLM-XComposer-2.5 is a versatile large vision-language model that supports long-context input and output. It performs well across a range of text-image understanding and composition applications, reaching a level comparable to GPT-4V while using only a 7B LLM backend. The model is trained on 24K interleaved image-text contexts and can seamlessly scale to 96K contexts through RoPE extrapolation. This long-context capability makes it well suited to tasks that require extensive input and output contexts. It also supports ultra-high-resolution image understanding, fine-grained video understanding, multi-turn multi-image dialogue, web page creation, and the composition of high-quality text-image articles.
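The page does not say which extrapolation scheme is used to go from 24K to 96K. As a rough illustration only, the sketch below implements plain rotary position embeddings (RoPE) in pure Python and checks the property that makes extrapolation plausible: the attention score between a rotated query and key depends only on their relative offset, not on their absolute positions. The function names, the toy vectors, and the `base=10000.0` default are assumptions made for this example and are not taken from the model's code.

```python
import math

def rope_angles(position, head_dim, base=10000.0):
    """Rotation angle for each pair of dimensions at a given token position."""
    return [position / (base ** (2 * i / head_dim)) for i in range(head_dim // 2)]

def apply_rope(vec, position, base=10000.0):
    """Rotate consecutive pairs (x0, x1), (x2, x3), ... by position-dependent angles."""
    out = []
    for i, theta in enumerate(rope_angles(position, len(vec), base)):
        x, y = vec[2 * i], vec[2 * i + 1]
        out.append(x * math.cos(theta) - y * math.sin(theta))
        out.append(x * math.sin(theta) + y * math.cos(theta))
    return out

def dot(a, b):
    """Plain dot product, standing in for an unnormalized attention score."""
    return sum(p * q for p, q in zip(a, b))

# Toy query/key vectors (hypothetical values, head_dim = 4).
q = [0.3, -1.2, 0.7, 0.5]
k = [1.0, 0.4, -0.6, 0.9]

# Same relative offset (100 positions): once well inside a 24K window,
# once near the 96K end of the extended range.
score_short = dot(apply_rope(q, 1_100), apply_rope(k, 1_000))
score_long = dot(apply_rope(q, 95_100), apply_rope(k, 95_000))

print(score_short, score_long)
```

The two printed scores agree up to floating-point error, which is the relative-position property of RoPE. Whether a model actually stays accurate at positions it never saw during training depends on the training recipe and on any scaling applied to the rotation frequencies, neither of which this sketch models.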