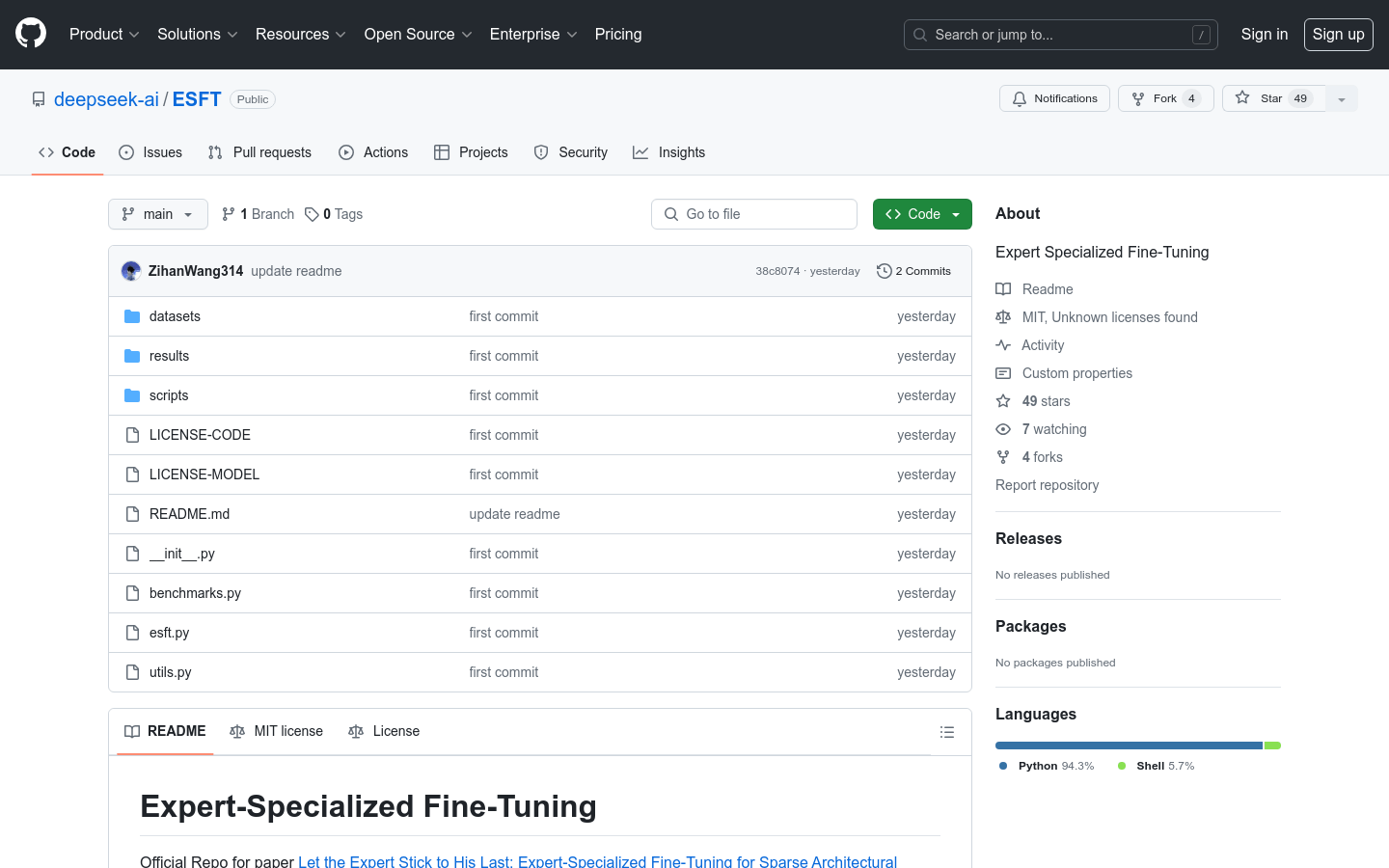

Expert-Specialized Fine-Tuning

A fine-tuning tool for customizing Mixture-of-Experts (MoE) large language models

- Install the dependencies and download the required adapters to get started quickly.

- Use the eval.py script to evaluate model performance on different datasets.

- Use the get_expert_scores.py script to compute a score for each expert from the evaluation dataset.

- Use the generate_expert_config.py script to generate a configuration, based on the expert scores, that converts the MoE model so that only the task-relevant experts are trained.
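The scoring and configuration steps above can be illustrated with a small plain-Python sketch. It is not the code in get_expert_scores.py or generate_expert_config.py. It assumes the router's top-K expert choices for every evaluation token have already been logged per layer, scores each expert by a token selection ratio (the average share of a sample's routing slots that the expert receives; the real scripts may compute scores differently), and keeps the highest-scoring experts until their cumulative score reaches a threshold p. The function names, the input layout, and the JSON shape are all hypothetical.

```python
import json
from typing import Dict, List

# Hypothetical routing log for ONE MoE layer: for each evaluation sample,
# for each token, the ids of the top-K experts chosen by the router.
Routing = List[List[List[int]]]


def token_selection_ratio(routing: Routing, num_experts: int, top_k: int) -> List[float]:
    """Average (over samples) fraction of routing slots assigned to each expert.

    Because every token fills exactly top_k slots, the scores of one layer sum to 1.
    """
    scores = [0.0] * num_experts
    for sample in routing:
        counts = [0] * num_experts
        for token_experts in sample:
            for e in token_experts:
                counts[e] += 1
        slots = len(sample) * top_k  # total routing slots in this sample
        for e in range(num_experts):
            scores[e] += counts[e] / slots
    return [s / len(routing) for s in scores]


def select_experts(scores: List[float], p: float) -> List[int]:
    """Take experts in descending score order until their cumulative score reaches p."""
    ranked = sorted(range(len(scores)), key=lambda e: scores[e], reverse=True)
    chosen: List[int] = []
    total = 0.0
    for e in ranked:
        if total >= p:
            break
        chosen.append(e)
        total += scores[e]
    return sorted(chosen)


def build_expert_config(routing_by_layer: Dict[int, Routing], num_experts: int,
                        top_k: int, p: float) -> Dict[str, List[int]]:
    """Hypothetical config: layer index -> experts to unfreeze for training."""
    return {
        str(layer): select_experts(token_selection_ratio(r, num_experts, top_k), p)
        for layer, r in sorted(routing_by_layer.items())
    }


if __name__ == "__main__":
    # Two samples, 4 experts, top-2 routing, logged for layer 1 only.
    routing = [
        [[0, 1], [0, 2], [0, 1]],
        [[1, 0], [3, 0]],
    ]
    print(json.dumps(build_expert_config({1: routing}, num_experts=4, top_k=2, p=0.7)))
```

Running the file prints {"1": [0, 1]}: experts 0 and 1 together receive about 79% of the routing slots, which crosses p = 0.7, so experts 2 and 3 would stay frozen. A larger p keeps more experts trainable at the cost of more compute and storage.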

Product Details

Expert-Specialized Fine-Tuning (ESFT) is an efficient fine-tuning method for customizing large language models (LLMs) built on a Mixture-of-Experts (MoE) architecture. Instead of updating the whole model, it tunes only the experts most relevant to the target task and freezes the other experts and modules, which preserves or improves task performance while reducing compute and storage requirements.
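Tuning only the task-related experts amounts to a trainable-parameter mask. The toy sketch below assumes a hypothetical naming scheme (layers.<layer>.mlp.experts.<expert>.weight), a hypothetical per-layer expert config, and made-up parameter counts. Only the selected experts' parameters are marked trainable; in this sketch everything else (attention, router, unselected experts) stays frozen, and the trainable fraction shows how small the updated, and therefore stored, part of the model becomes.

```python
import re
from typing import Dict, List

# Hypothetical parameter naming scheme for a toy MoE model.
EXPERT_PARAM = re.compile(r"^layers\.(\d+)\.mlp\.experts\.(\d+)\.")


def trainable_mask(param_names: List[str],
                   expert_config: Dict[str, List[int]]) -> Dict[str, bool]:
    """True only for parameters that belong to an expert selected for its layer."""
    selected = {(int(layer), e) for layer, experts in expert_config.items() for e in experts}
    mask: Dict[str, bool] = {}
    for name in param_names:
        m = EXPERT_PARAM.match(name)
        # Non-expert parameters (attention, router) never match and stay frozen.
        mask[name] = bool(m) and (int(m.group(1)), int(m.group(2))) in selected
    return mask


def trainable_fraction(param_sizes: Dict[str, int],
                       expert_config: Dict[str, List[int]]) -> float:
    """Share of all parameters that would be updated (and saved) for this task."""
    mask = trainable_mask(list(param_sizes), expert_config)
    trained = sum(n for name, n in param_sizes.items() if mask[name])
    return trained / sum(param_sizes.values())


def toy_param_sizes(num_layers: int = 2, num_experts: int = 4) -> Dict[str, int]:
    """Made-up parameter counts: 500 attention + 100 router + 100 per expert per layer."""
    sizes: Dict[str, int] = {}
    for layer in range(num_layers):
        sizes[f"layers.{layer}.self_attn.weight"] = 500
        sizes[f"layers.{layer}.mlp.gate.weight"] = 100
        for e in range(num_experts):
            sizes[f"layers.{layer}.mlp.experts.{e}.weight"] = 100
    return sizes


if __name__ == "__main__":
    config = {"0": [1], "1": [0, 2]}  # hypothetical output of the selection step
    print(trainable_fraction(toy_param_sizes(), config))
```

With three of the eight experts selected, the script prints 0.15, meaning 15% of this toy model's parameters are trained. In a PyTorch model, the same mask could be applied by iterating over model.named_parameters() and setting each tensor's requires_grad attribute accordingly.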