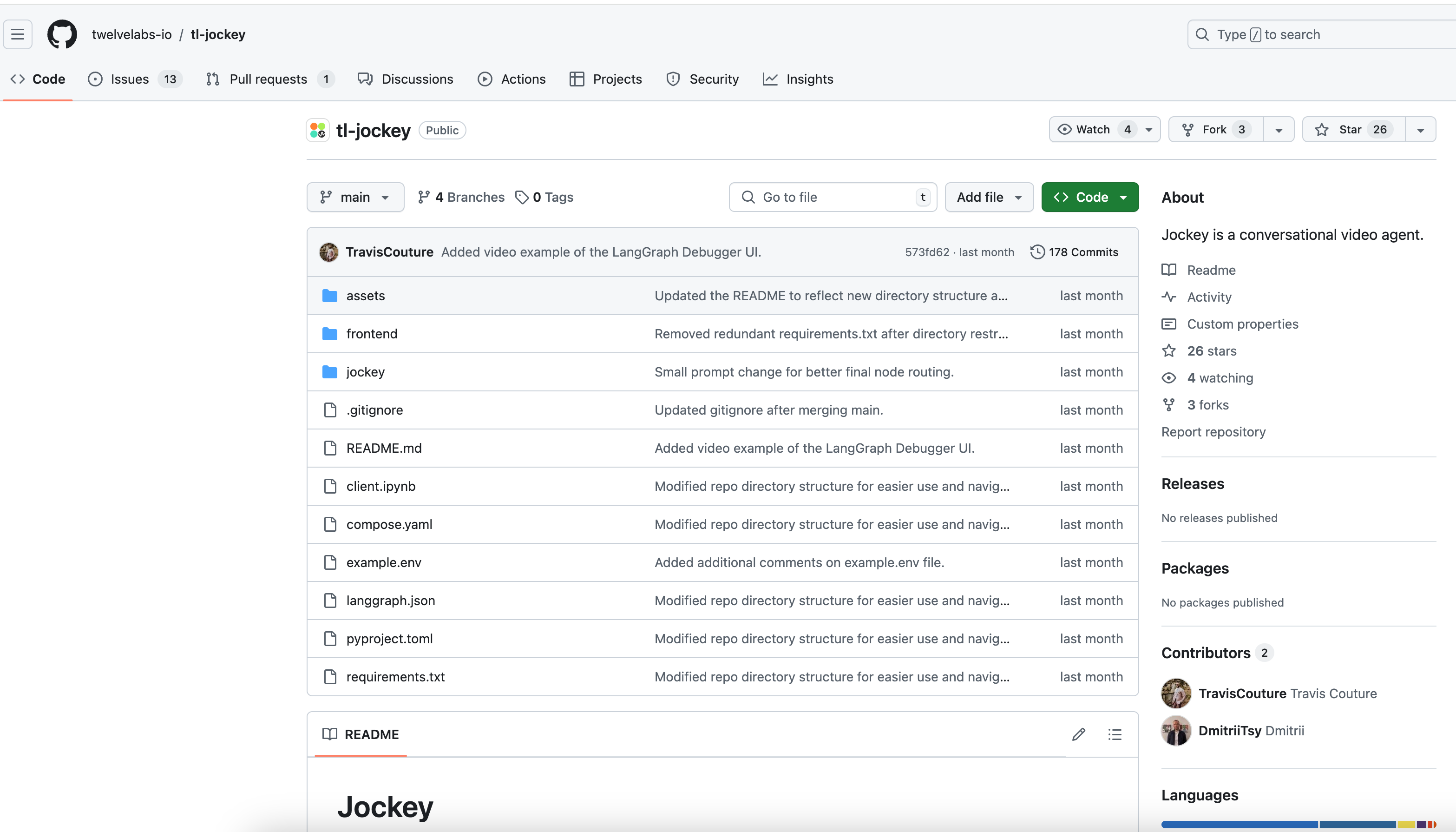

Jockey

A conversational video agent that combines large language models with video processing APIs.

- Combines large language models (LLMs) with video processing APIs to distribute the workload of complex video workflows.

- Uses LangGraph to allocate tasks, improving video processing efficiency.

- Uses LLMs to plan execution steps logically and to interact with users, improving the user experience.

- Processes video tasks directly with video foundation models, without intermediary representations such as pre-generated subtitles.

- Supports customization and extension to adapt to different video-related use cases.

- Can be deployed in a terminal or on a LangGraph API server, flexibly supporting development and testing needs.

Product Details

Jockey is a conversational video agent built on the Twelve Labs API and LangGraph. It combines the capabilities of existing Large Language Models (LLMs) with the Twelve Labs API, using LangGraph to allocate tasks so that the workload of a complex video workflow is distributed to the appropriate foundation models. LLMs handle the logical planning of execution steps and the interaction with users, while video-related tasks are passed to the Twelve Labs API, which is powered by Video Foundation Models (VFMs). These models process video natively, without intermediary representations such as pre-generated subtitles.
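The division of labor described above (an LLM plans the steps, then each step is routed to a specialized worker) can be illustrated with a small, dependency-free Python sketch of a plan-then-dispatch loop. This is not Jockey's code and does not use the LangGraph or Twelve Labs APIs. The keyword-based `planner` is a stand-in for the LLM, and the worker names (`video_search`, `video_editing`, `reflect`) and their string outputs are hypothetical placeholders for calls that would go to video foundation models.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Step:
    worker: str       # hypothetical name of the worker that handles this step
    instruction: str  # what the worker is asked to do


@dataclass
class State:
    request: str
    plan: list[Step] = field(default_factory=list)
    results: list[str] = field(default_factory=list)


def planner(state: State) -> State:
    """Stand-in for the LLM planner: turns a user request into ordered steps.

    A real agent would prompt an LLM here; this sketch uses fixed keyword rules.
    """
    text = state.request.lower()
    if "find" in text or "search" in text:
        state.plan.append(Step("video_search", state.request))
    if "edit" in text or "combine" in text:
        state.plan.append(Step("video_editing", state.request))
    if not state.plan:
        # No video work detected: let the "LLM" answer directly.
        state.plan.append(Step("reflect", state.request))
    return state


# Hypothetical workers; in a real system these would call a video API.
WORKERS: dict[str, Callable[[str], str]] = {
    "video_search": lambda instr: f"search results for: {instr}",
    "video_editing": lambda instr: f"edited video for: {instr}",
    "reflect": lambda instr: f"LLM answer for: {instr}",
}


def dispatch(state: State) -> State:
    """Supervisor step: send each planned step to its worker, in order."""
    for step in state.plan:
        state.results.append(WORKERS[step.worker](step.instruction))
    return state


def run(request: str) -> State:
    """Plan first, then dispatch, mirroring the planner/worker split."""
    return dispatch(planner(State(request)))


if __name__ == "__main__":
    final = run("Find clips of goals and combine them")
    print([s.worker for s in final.plan])  # ['video_search', 'video_editing']
```

Keeping planning separate from execution means the planner can be swapped for a real LLM call, and individual workers can be replaced or added, without changing the dispatch loop. A graph framework such as LangGraph expresses the same idea as nodes and edges rather than a hand-written loop.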