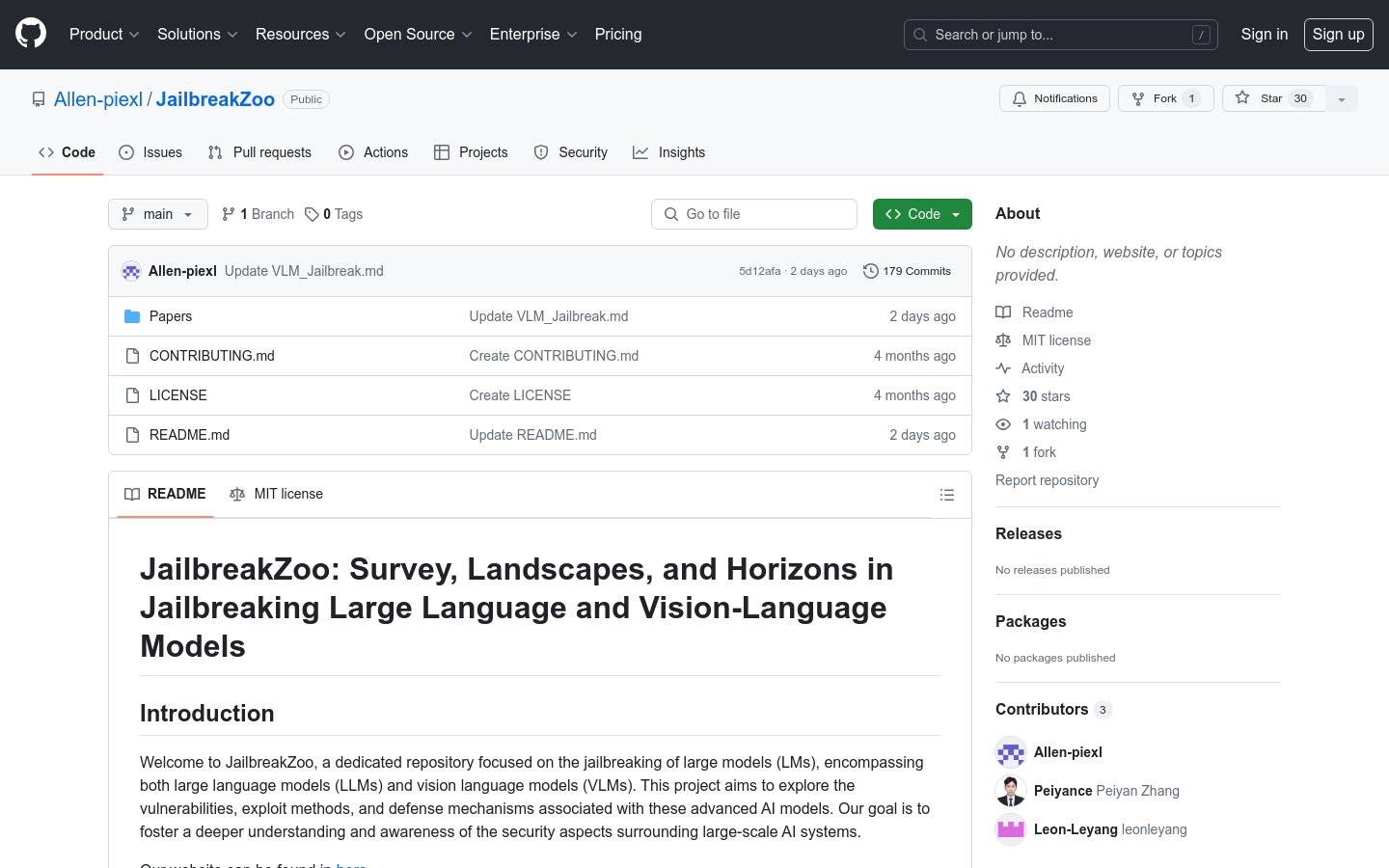

JailbreakZoo

Exploring vulnerabilities and defenses in large language models and visual language models

- Systematically organizes jailbreak techniques for large language models by release timeline

- Provides defense strategies and methods for large language models

- Introduces the vulnerabilities and jailbreak methods specific to visual language models

- Covers defense mechanisms for visual language models, including the latest developments and strategies

- Encourages community contributions, such as adding new research, improving documentation, or sharing jailbreak and defense strategies

Product Details

JailbreakZoo is a resource library dedicated to jailbreaking large models, including large language models and visual language models. The project explores the vulnerabilities, exploitation methods, and defense mechanisms of these advanced AI models, with the goal of deepening understanding of the security of large-scale AI systems.