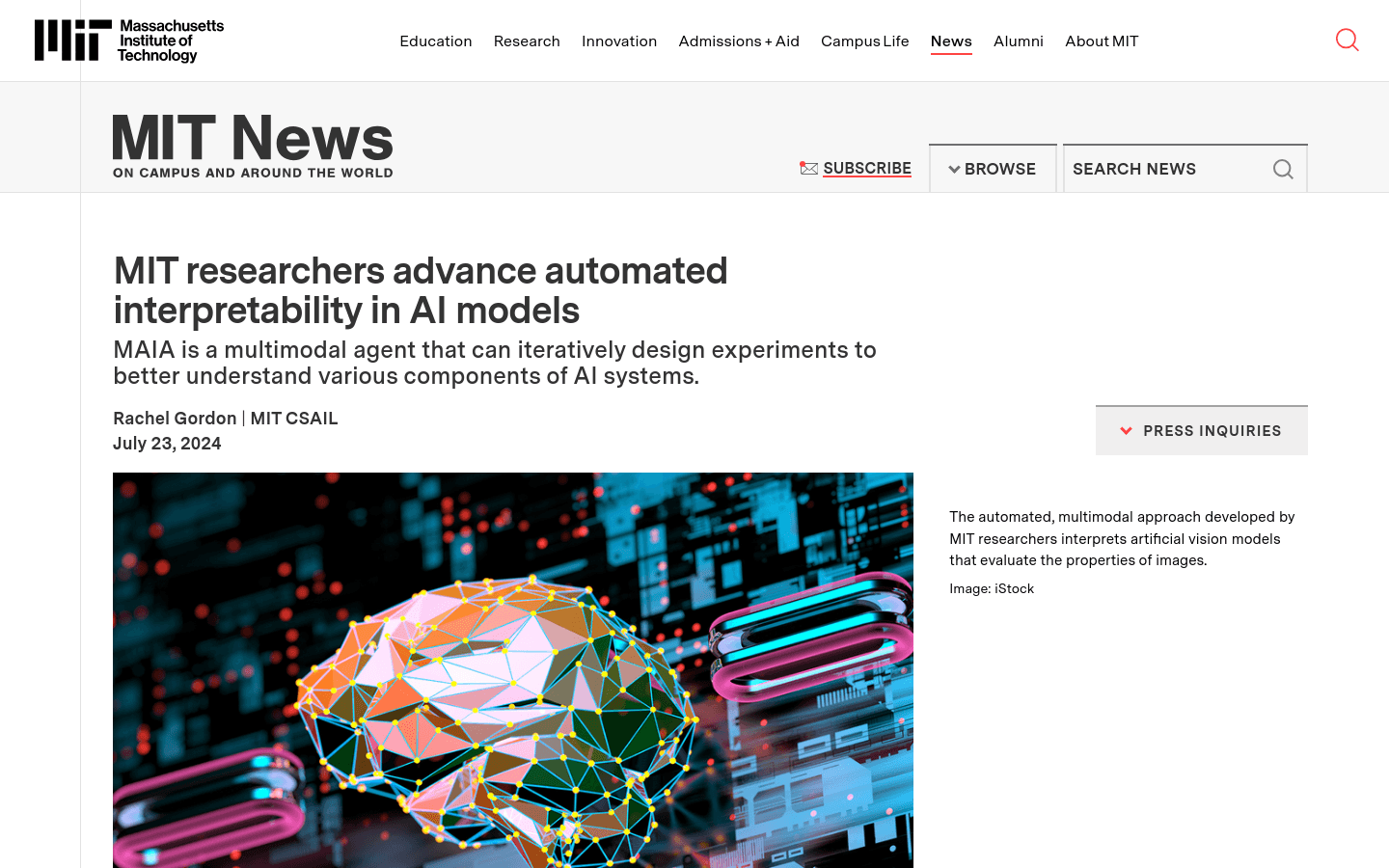

MIT MAIA

An automated interpretability agent that makes AI models more transparent

- Automatically identify individual components inside AI vision models and describe the visual concepts that activate them.

- Make image classifiers more robust to new situations by removing irrelevant features.

- Search for hidden biases in AI systems to help uncover potential fairness issues.

- Use tools to retrieve the dataset examples that maximally activate a specific neuron.

- Design experiments to test each hypothesis, validating it by generating and editing synthetic images.

- Evaluate explanations of neuron behavior in two settings: synthetic systems whose behavior is known, and neurons in trained AI systems that have no ground-truth descriptions.

- Refine its approach through iterative analysis until it can give a comprehensive answer.

Product Details

MAIA (Multimodal Automated Interpretability Agent) is an automated system developed by the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL) to improve the interpretability of artificial intelligence models. It automates a range of neural network interpretability tasks using a vision-language model equipped with a set of experimental tools. MAIA generates hypotheses, designs experiments to test them, and refines its understanding through iterative analysis, giving deeper insight into the inner workings of AI models.
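
The hypothesize, test, and refine loop described above can be illustrated with a toy sketch. This is not MAIA's code or API. Every name here (`DATASET`, `synthetic_neuron`, `top_exemplars`, `causal_effect`, `explain`) is hypothetical, and the "images" are simplified to sets of concept tags so the example runs with the standard library alone. It assumes a synthetic neuron with known behavior, retrieves its top-activating exemplars, and accepts a candidate concept only if editing that concept out of the exemplars lowers the activation.

```python
from collections import Counter

# Toy "images": each is a set of concept tags standing in for real pixels.
DATASET = [
    {"dog", "grass"},
    {"dog", "sofa"},
    {"cat", "sofa"},
    {"car", "road"},
    {"dog", "road"},
    {"cat", "grass"},
]

def synthetic_neuron(image):
    """Synthetic unit with known ground truth: it responds only to 'dog'."""
    return 1.0 if "dog" in image else 0.0

def top_exemplars(neuron, dataset, k):
    """Return the k dataset images with the highest activation."""
    return sorted(dataset, key=neuron, reverse=True)[:k]

def causal_effect(neuron, images, concept):
    """Mean activation drop when `concept` is edited out of each image."""
    drops = [neuron(img) - neuron(img - {concept}) for img in images]
    return sum(drops) / len(drops)

def explain(neuron, dataset, k=3, threshold=0.5):
    """Propose concepts from the top exemplars and keep the first one
    whose removal causes a large activation drop."""
    exemplars = top_exemplars(neuron, dataset, k)
    counts = Counter(tag for img in exemplars for tag in img)
    # Hypotheses are tried from most to least frequent among the exemplars.
    for concept, _ in counts.most_common():
        if causal_effect(neuron, exemplars, concept) >= threshold:
            return concept
    return None  # no hypothesis survived the editing experiment

if __name__ == "__main__":
    print(explain(synthetic_neuron, DATASET))
```

Because the synthetic neuron's behavior is known in advance, the returned explanation can be checked directly against the ground truth, which mirrors the idea of validating explanations on systems with known behavior.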