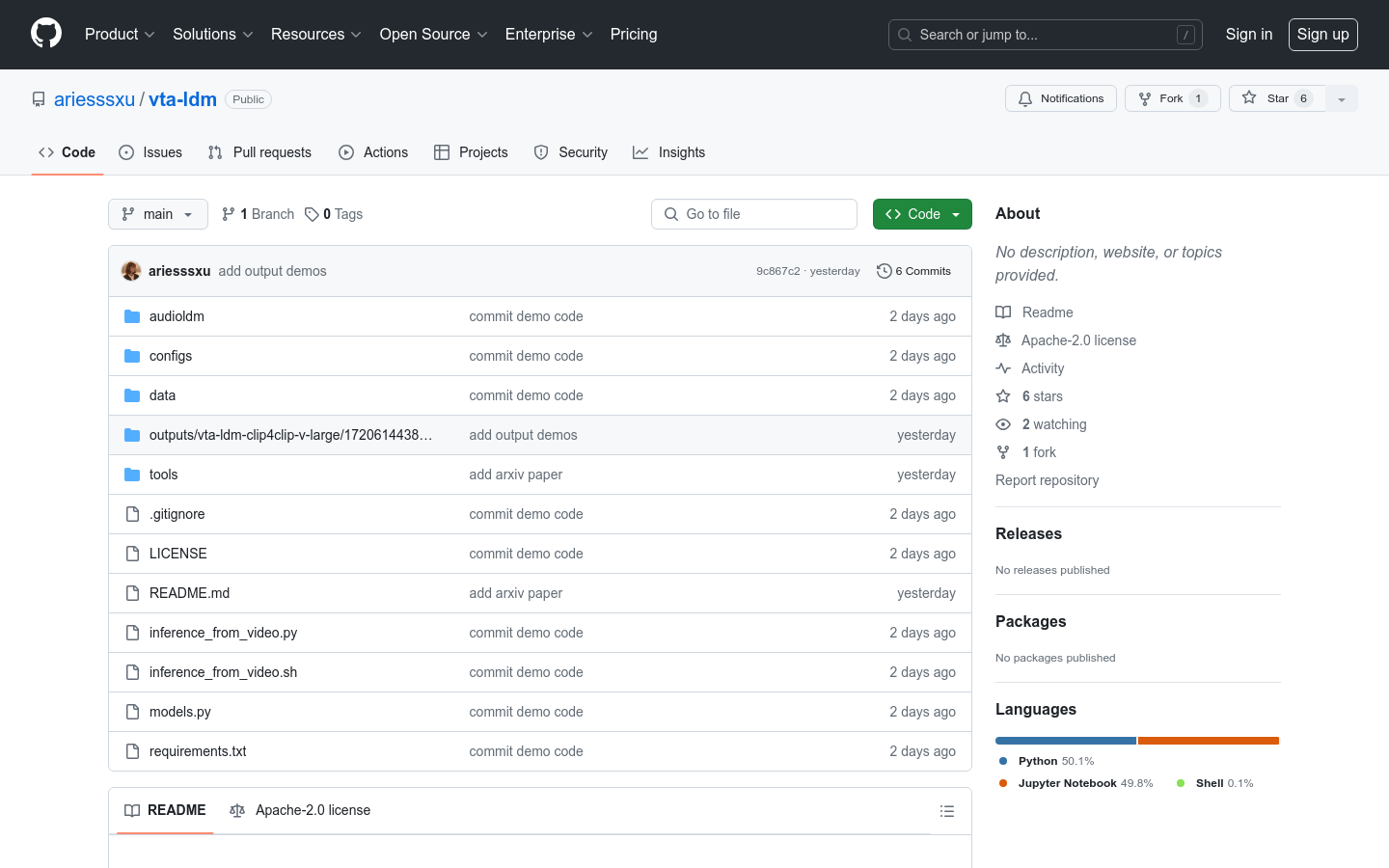

vta-ldm

Video-to-audio generation model

- Generates audio that is semantically and temporally aligned with the video content

- Supports installing Python dependencies with conda

- Provides a recommended method for downloading checkpoints from Hugging Face

- Provides multiple model variants, such as VTA_LDM+IB/LB/CAVP/VIVIT

- Lets users customize hyperparameters to suit their needs

- Provides scripts for merging the generated audio with the original video

- Merges audio and video using ffmpeg
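The ffmpeg-based merge step can be illustrated with a minimal sketch. This is not the project's own script: the function names and file names below are hypothetical, and the sketch assumes `ffmpeg` is installed and available on `PATH`. It copies the video stream unchanged, takes the audio track from the generated file, and stops at the shorter of the two inputs.

```python
import subprocess
from pathlib import Path


def build_merge_command(video_path, audio_path, output_path):
    """Build an ffmpeg command that replaces a video's audio track.

    Hypothetical helper for illustration; not part of the vta-ldm repository.
    """
    return [
        "ffmpeg",
        "-y",                    # overwrite the output file if it exists
        "-i", str(video_path),   # input 0: original video
        "-i", str(audio_path),   # input 1: generated audio
        "-map", "0:v:0",         # take the first video stream from input 0
        "-map", "1:a:0",         # take the first audio stream from input 1
        "-c:v", "copy",          # copy the video stream without re-encoding
        "-c:a", "aac",           # encode audio as AAC for MP4 compatibility
        "-shortest",             # stop when the shorter input ends
        str(output_path),
    ]


def merge_audio_with_video(video_path, audio_path, output_path):
    """Run ffmpeg and return the output path; raises if ffmpeg fails."""
    cmd = build_merge_command(video_path, audio_path, output_path)
    subprocess.run(cmd, check=True)
    return Path(output_path)
```

A call such as `merge_audio_with_video("input.mp4", "generated.wav", "merged.mp4")` would write the merged file, with all three file names being placeholders. Keeping the command construction separate from the `subprocess` call makes the arguments easy to inspect before anything is executed.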

Product Details

VTA-LDM is a deep learning model for video-to-audio generation: given a video as input, it generates audio that is semantically and temporally aligned with the video content. It builds on recent advances in generative video models, particularly text-to-video generation, by adding a matching audio track. The model was developed by Manjie Xu and colleagues at Tencent AI Lab. It is useful in areas such as video production and audio post-production.