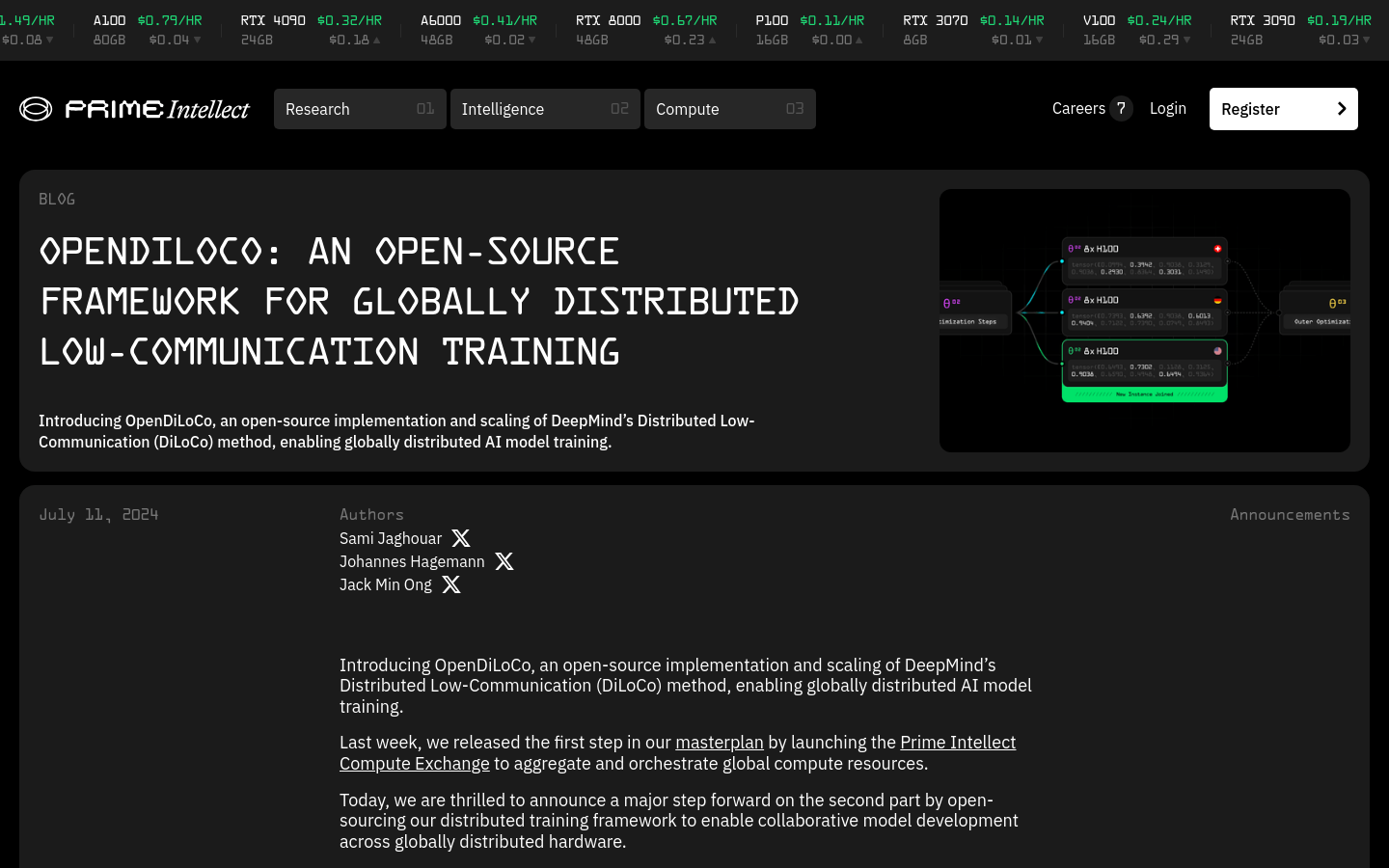

OpenDiLoCo

An open-source implementation of distributed low-communication (DiLoCo) AI model training

- Supports distributed AI model training on a global scale.

- Uses the Hivemind library for communication and metadata synchronization between nodes.

- Integrates with PyTorch FSDP, so a single DiLoCo worker can scale to hundreds of machines.

- Demonstrated in practice by training a model across two continents and three countries while maintaining 90-95% compute utilization.

- Includes ablation studies that give insight into the algorithm's scalability and compute efficiency.

- Supports fault-tolerant training across different hardware setups.

- Allows resources to be added or removed on the fly, so new devices and clusters can join or leave during training.

Product Details

OpenDiLoCo is an open-source framework that implements and extends DeepMind's Distributed Low-Communication (DiLoCo) method for globally distributed AI model training. It provides a scalable, decentralized framework for training AI models efficiently when compute resources are spread across different regions, which helps broaden access to AI technology and encourages innovation.
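To make the "low communication" idea concrete, here is a minimal toy sketch of the DiLoCo training pattern in plain Python. It is not OpenDiLoCo's code and uses neither Hivemind nor PyTorch. The function names (`local_training`, `outer_step`, `train`), the linear toy model, and all hyperparameter values are assumptions chosen for illustration. Each worker trains locally for many steps without talking to the others; once per round, the workers' parameter changes are averaged into a pseudo-gradient and applied by an outer optimizer with Nesterov momentum. For simplicity the inner optimizer here is plain gradient descent, whereas the DiLoCo paper uses AdamW.

```python
# Toy sketch of the DiLoCo pattern (illustrative only, not OpenDiLoCo's code).
# Assumptions: a linear model y = w*x + b, plain gradient descent as the inner
# optimizer, and workers simulated sequentially inside one process.


def local_training(theta, shard, num_steps, lr):
    """Run num_steps of gradient descent on one worker's shard, with no communication."""
    w, b = theta
    for _ in range(num_steps):
        # Gradients of the mean of 0.5 * (w*x + b - y)**2 over the shard.
        grad_w = sum((w * x + b - y) * x for x, y in shard) / len(shard)
        grad_b = sum((w * x + b - y) for x, y in shard) / len(shard)
        w -= lr * grad_w
        b -= lr * grad_b
    return [w, b]


def outer_step(theta, momentum, worker_thetas, outer_lr, beta):
    """Average the workers' pseudo-gradients and apply SGD with Nesterov momentum."""
    n = len(worker_thetas)
    # Pseudo-gradient: how far each worker moved away from the shared parameters.
    pseudo_grad = [
        sum(theta[i] - wt[i] for wt in worker_thetas) / n for i in range(len(theta))
    ]
    new_momentum = [beta * m + g for m, g in zip(momentum, pseudo_grad)]
    new_theta = [
        t - outer_lr * (g + beta * m)
        for t, g, m in zip(theta, pseudo_grad, new_momentum)
    ]
    return new_theta, new_momentum


def train(num_workers=4, rounds=30, inner_steps=50,
          inner_lr=0.1, outer_lr=0.7, beta=0.9):
    """Fit y = 2x + 1 with simulated workers that synchronize once per round."""
    xs = [(i - 16) / 16 for i in range(32)]
    data = [(x, 2.0 * x + 1.0) for x in xs]
    # Each worker gets its own interleaved slice of the data.
    shards = [data[k::num_workers] for k in range(num_workers)]
    theta, momentum = [0.0, 0.0], [0.0, 0.0]
    for _ in range(rounds):
        # Inner phase: every worker starts from the same shared parameters.
        worker_thetas = [
            local_training(theta, shard, inner_steps, inner_lr) for shard in shards
        ]
        # Outer phase: the only point where workers exchange information.
        theta, momentum = outer_step(theta, momentum, worker_thetas, outer_lr, beta)
    return theta


if __name__ == "__main__":
    w, b = train()
    print(f"learned w={w:.4f}, b={b:.4f}")
```

In this sketch the workers exchange parameters once every `inner_steps` local updates rather than after every step, which is why the approach can tolerate slow links between distant clusters.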