SEED-Story

Multimodal Long Story Generation Model

- Generate multimodal long stories: Combine text and images to create coherent stories.

- Start from user input: User-provided images and text serve as the starting point of the story.

- Support long stories of up to 25 multimodal sequences: Generation can extend to 25 sequences, even though at most 10 sequences are used during training.

- Image style and character consistency: Ensure that the generated images keep a consistent style and consistent characters, and that they match the story text.

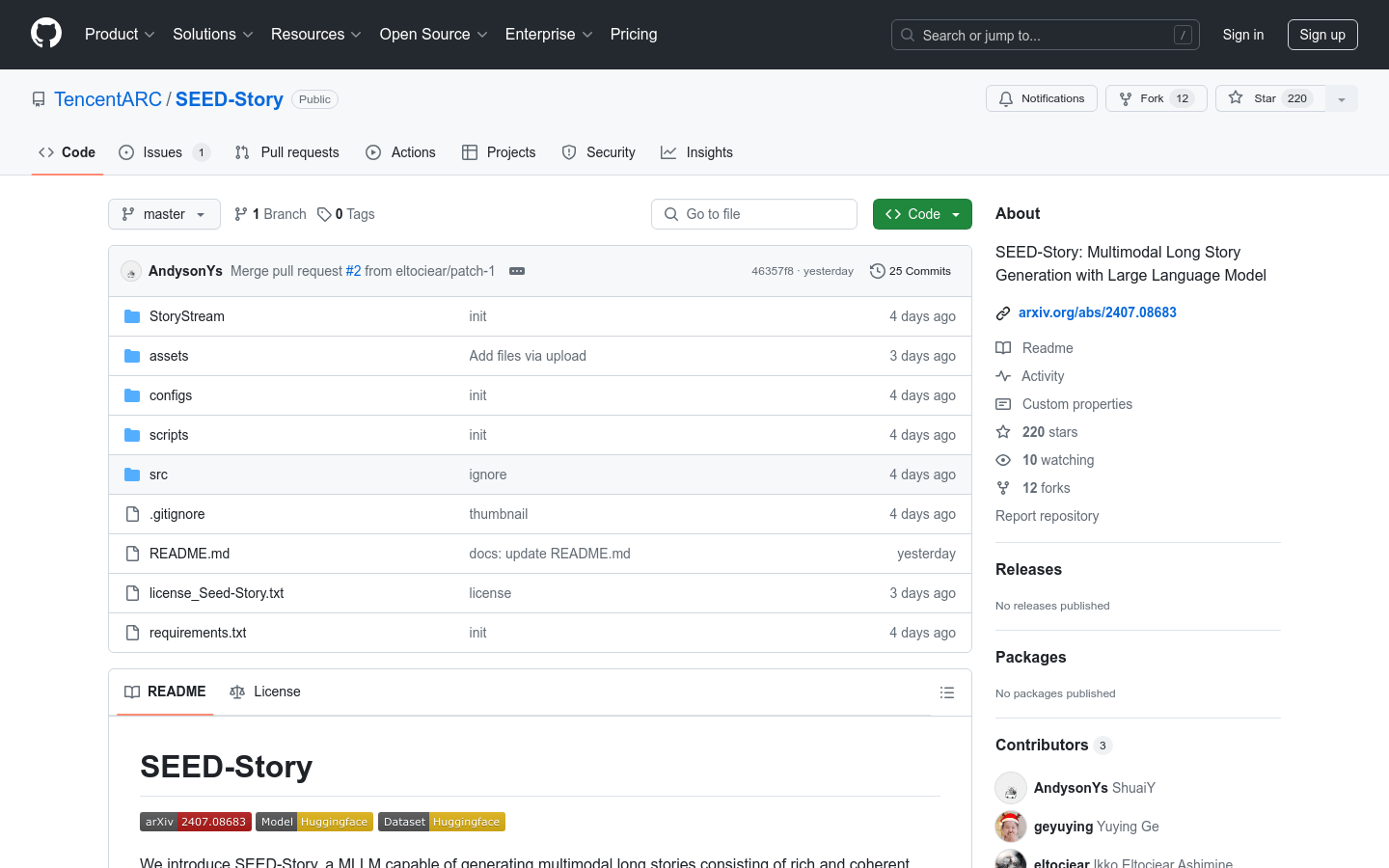

- StoryStream: A large-scale dataset designed for training and benchmarking multimodal story generation.

- Release of model weights and training code: Provide pre-trained tokenizers, de-tokenizers, and the base model SEED-X.

- Support instruction tuning: Further improve model performance through instruction tuning.

Product Details

SEED-Story is a multimodal long story generation model built on a Multimodal Large Language Model (MLLM). Starting from user-provided images and text, it generates rich, coherent narrative text together with style-consistent images. It represents cutting-edge AI technology for creative writing and visual arts, producing high-quality multimodal story content and opening new possibilities for the creative industry.
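The generation process described above can be pictured as an interleaved loop. The following is a minimal sketch under stated assumptions, not SEED-Story's actual implementation: it assumes each new sequence produces text first and then an image, both conditioned on all previous (text, image) pairs. The names `generate_story`, `next_text`, `next_image`, `dummy_text`, and `dummy_image` are hypothetical placeholders; in the real model these roles would presumably be filled by the MLLM and the image de-tokenizer. Only the 25-sequence limit comes from the description above.

```python
from typing import Callable, List, Tuple

# One multimodal sequence: (story text, image reference).
Sequence = Tuple[str, str]

# Hypothetical generator signatures, NOT the SEED-Story API.
TextGenerator = Callable[[List[Sequence]], str]
ImageGenerator = Callable[[List[Sequence], str], str]

MAX_SEQUENCES = 25  # upper limit stated for SEED-Story generation


def generate_story(start_image: str, start_text: str,
                   next_text: TextGenerator, next_image: ImageGenerator,
                   num_sequences: int) -> List[Sequence]:
    """Build a story by alternating text and image generation (sketch)."""
    if not 1 <= num_sequences <= MAX_SEQUENCES:
        raise ValueError(f"num_sequences must be in 1..{MAX_SEQUENCES}")
    # The user's image and text form the first sequence of the story.
    story: List[Sequence] = [(start_text, start_image)]
    while len(story) < num_sequences:
        history = list(story)  # copy so generators cannot mutate the story
        text = next_text(history)  # next text conditioned on all prior pairs
        image = next_image(history, text)  # image conditioned on history + new text
        story.append((text, image))
    return story


# Hypothetical dummy generators that stand in for real models.
def dummy_text(history: List[Sequence]) -> str:
    return f"Part {len(history) + 1} of the story."


def dummy_image(history: List[Sequence], text: str) -> str:
    return f"image_{len(history) + 1}.png"


story = generate_story("cover.png", "Once upon a time...",
                       dummy_text, dummy_image, num_sequences=5)
for text, image in story:
    print(image, text)
```

Passing the full history at every step is what lets later images stay consistent with earlier characters and style in this sketch; a real system would also have to manage context length as the story grows.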