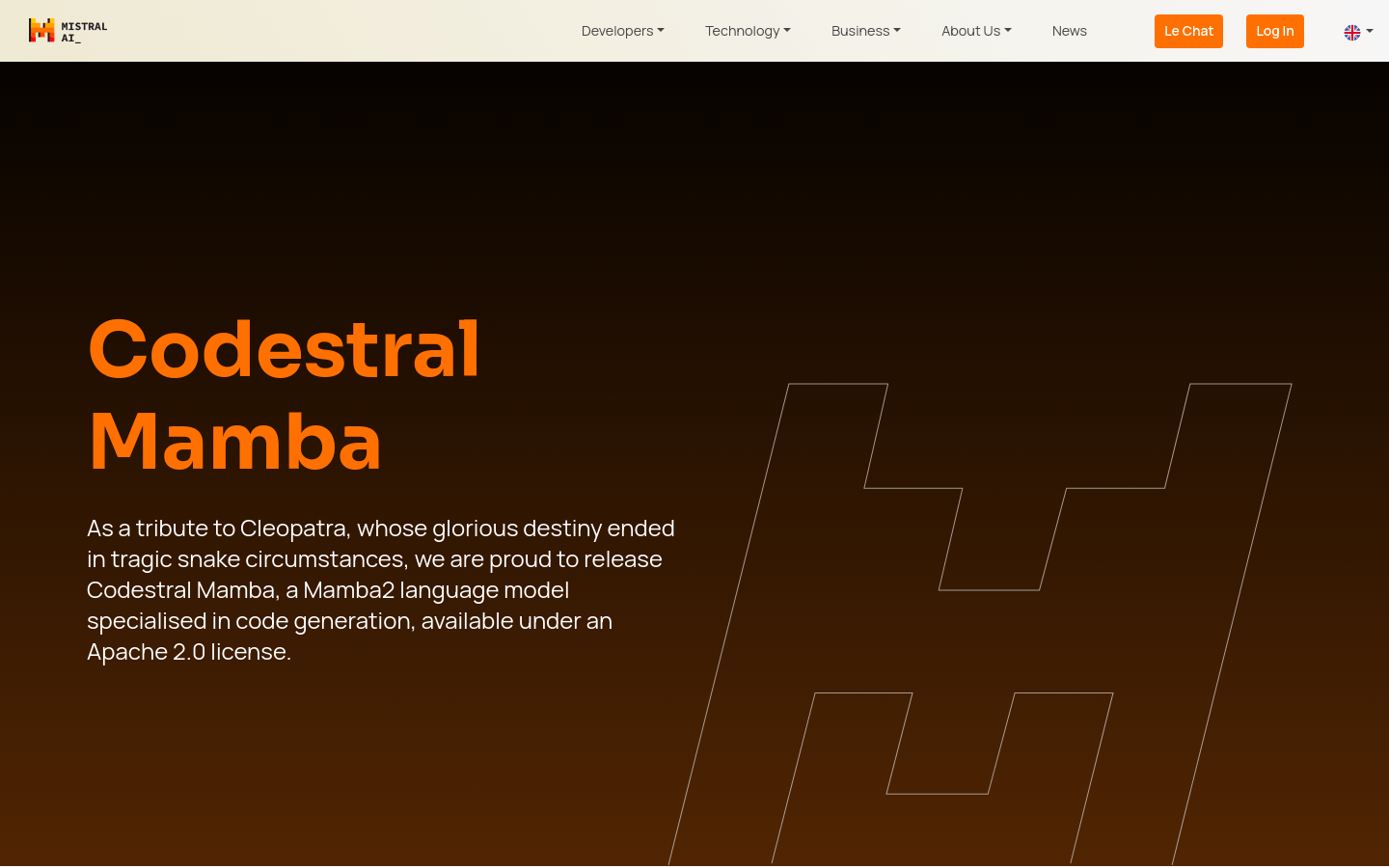

Codestral Mamba

An advanced language model focused on code generation

- Linear-time inference, with fast responses on long inputs

- In theory, it can handle sequences of infinite length

- Advanced code and reasoning abilities, comparable to state-of-the-art Transformer-based models

- Supports in-context retrieval over up to 256k tokens

- Can be deployed using the mistral-inference SDK

- Supports TensorRT-LLM and llama.cpp for local inference

- Free to use, modify, and distribute under the Apache 2.0 license

Product Details

Codestral Mamba is a language model developed by the Mistral AI team that focuses on code generation. It is based on the Mamba2 architecture, which offers linear-time inference and can, in theory, model sequences of infinite length. The model was trained with advanced code and reasoning capabilities and performs on par with state-of-the-art Transformer-based models.
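To illustrate why a state-space architecture can run in linear time, here is a minimal sketch of a toy diagonal linear recurrence. It is not the Mamba2 implementation, and the function name `ssm_scan` and the parameters `a`, `b`, `c` are hypothetical names chosen for this example. The point it shows is that each token updates a fixed-size state once, so the cost grows linearly with sequence length and the memory used for the state does not grow at all.

```python
from typing import Iterable, List


def ssm_scan(xs: Iterable[float], a: List[float], b: List[float], c: List[float]) -> List[float]:
    """Run a toy diagonal linear state-space recurrence over a sequence.

    h_t = a * h_{t-1} + b * x_t   (elementwise, fixed-size state)
    y_t = sum(c * h_t)

    Illustrative only: a, b, c are hypothetical fixed parameters,
    not weights or structures taken from any real model.
    """
    state = [0.0] * len(a)  # fixed-size state, independent of sequence length
    ys = []
    for x in xs:  # one constant-cost update per token, so linear time overall
        state = [a_i * h_i + b_i * x for a_i, h_i, b_i in zip(a, state, b)]
        ys.append(sum(c_i * h_i for c_i, h_i in zip(c, state)))
    return ys


if __name__ == "__main__":
    # An impulse followed by zeros decays geometrically through the state.
    print(ssm_scan([1.0, 0.0, 0.0], a=[0.5], b=[1.0], c=[1.0]))
```

Because the function only consumes an iterable and keeps one small state vector, it could in principle be fed a stream of any length, which is the sense in which such models handle unbounded sequences in theory.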