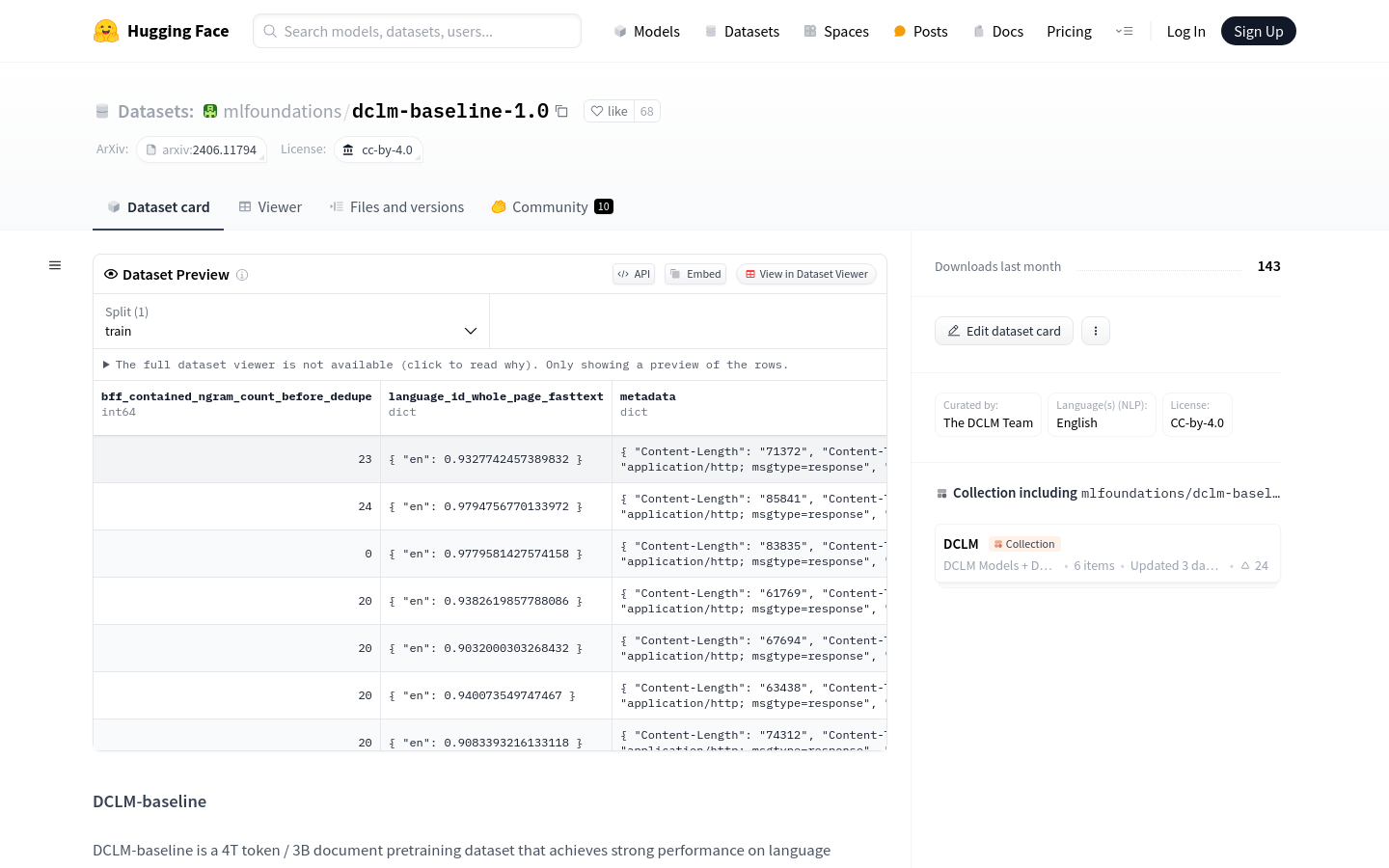

DCLM-baseline

High-performance benchmark dataset for language models

- High-performance dataset for benchmarking language models

- Contains a large number of tokens and documents, suitable for large-scale training

- Cleaned, filtered, and deduplicated to ensure data quality

- Provides a benchmark for studying the performance of language models

- Not suitable for training models for production environments or for specialized domains

- Helps researchers understand the impact of data curation on model performance

- Advances research on and development of efficient language models

Product Details

DCLM-baseline is a pretraining dataset for language model benchmarking, consisting of 4T tokens and 3B documents. It is extracted from Common Crawl through carefully designed cleaning, filtering, and deduplication steps, and aims to demonstrate the importance of data curation in training efficient language models. The dataset is for research purposes only and is not suitable for training models for production environments or for specialized domains such as code and mathematics.
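The three steps named above (cleaning, filtering, deduplication) can be illustrated with a toy sketch. This is not the actual DCLM-baseline pipeline: the function names, the whitespace normalization, the word-count threshold, and the exact-match hashing are all assumptions chosen only to show the general shape of such a curation pass on a handful of in-memory strings.

```python
import hashlib
import re


def clean(text: str) -> str:
    """Collapse runs of whitespace and strip leading/trailing spaces."""
    return re.sub(r"\s+", " ", text).strip()


def keep(text: str, min_words: int = 5) -> bool:
    """Toy quality filter (assumed threshold): keep documents with enough words."""
    return len(text.split()) >= min_words


def deduplicate(docs):
    """Drop exact duplicates (case-insensitive), keeping the first occurrence."""
    seen = set()
    unique = []
    for doc in docs:
        digest = hashlib.sha256(doc.lower().encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(doc)
    return unique


def curate(raw_docs):
    """Run the three steps in order: clean, filter, deduplicate."""
    cleaned = [clean(d) for d in raw_docs]
    filtered = [d for d in cleaned if keep(d)]
    return deduplicate(filtered)


# Hypothetical sample documents, for illustration only.
raw = [
    "  Language models   learn from large text corpora. ",
    "Language models learn from large text corpora.",
    "Click here",
    "Careful data curation can matter as much as model size.",
]
curated = curate(raw)
print(curated)
```

In this sketch the first two documents become identical after cleaning, so one is dropped, and the short "Click here" fragment is removed by the filter. Web-scale pipelines typically replace each step with something far more robust, such as learned quality classifiers and fuzzy deduplication.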