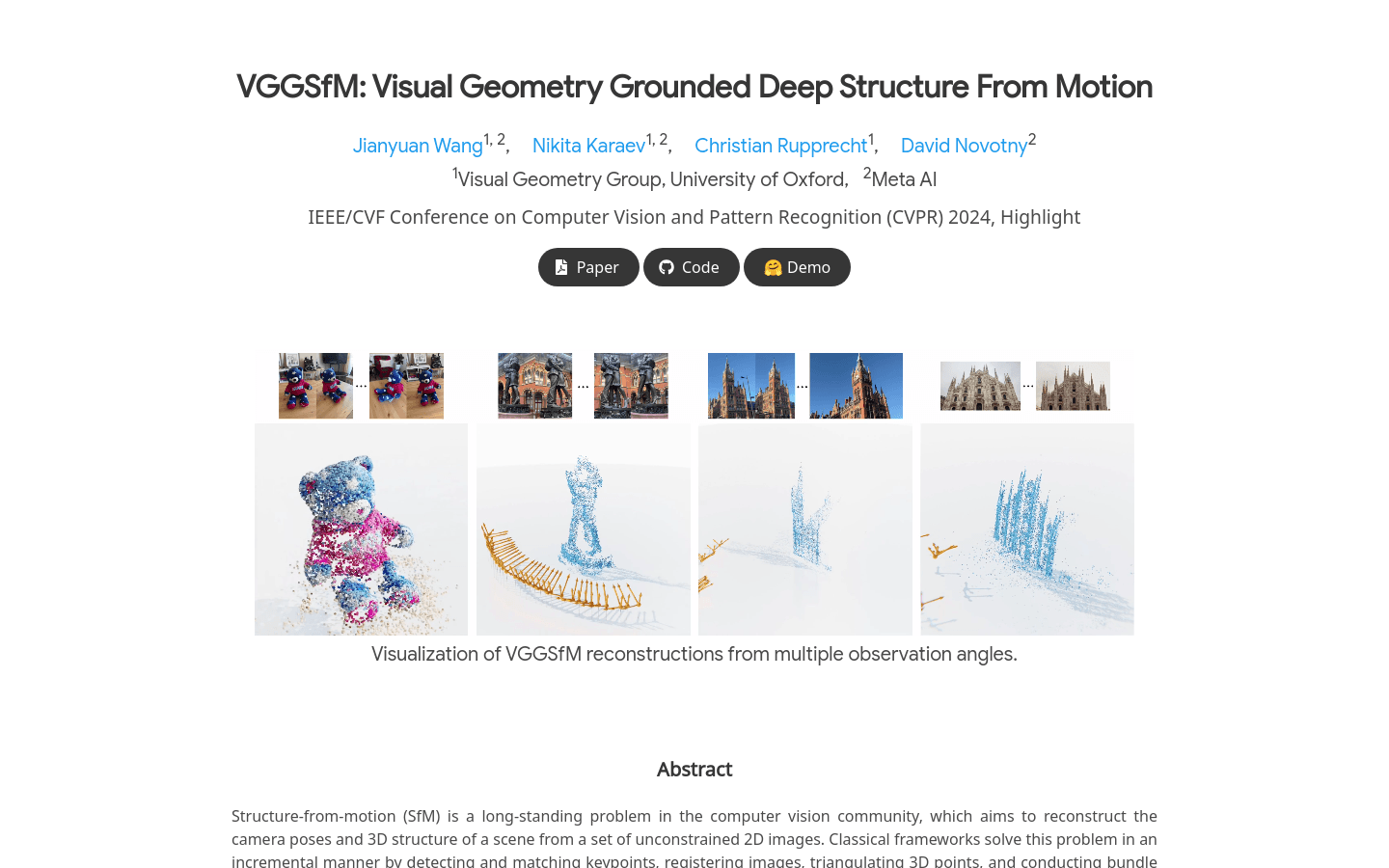

VGGSfM

Deep Learning Driven 3D Reconstruction Technology

- Extract 2D trajectories from input images

- Reconstructing a camera using image and trajectory features

- Initialize point cloud based on these trajectories and camera parameters

- Apply bundled adjustment layers for reconstruction and refinement

- Fully differentiable framework design

- Reconstruct photos in field applications and display estimated point clouds and cameras

- Qualitative visualization of camera and point cloud reconstruction on Co3D and IMC Phototourism

- In each row, the leftmost frame contains the query image and query point, and the predicted trajectory point is displayed on the right side

Product Details

VGGSfM is a deep learning based 3D reconstruction technique aimed at reconstructing the camera pose and 3D structure of a scene from an unrestricted set of 2D images. This technology achieves end-to-end training through a fully differentiable deep learning framework. It utilizes deep 2D point tracking technology to extract reliable pixel level trajectories, while restoring all cameras based on image and trajectory features, and optimizing cameras and triangulating 3D points through differentiable bundling adjustment layers. VGGSfM has achieved state-of-the-art performance on three popular datasets: CO3D, IMC Phototourism, and ETH3D.