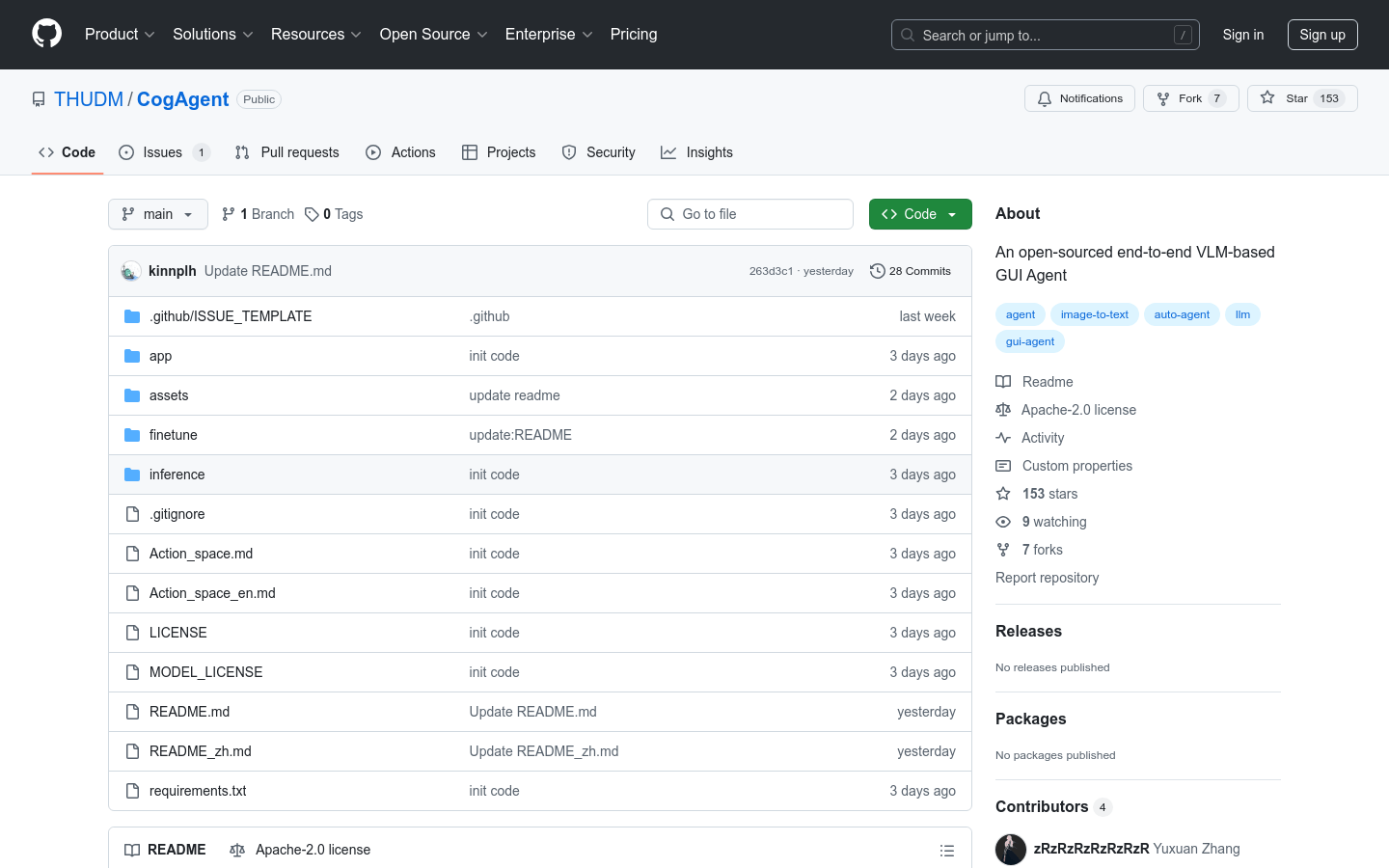

CogAgent

Open-source, end-to-end GUI agent based on a visual language model (VLM)

- Supports bilingual (Chinese and English) interaction through screenshots and natural language.

- Offers significant advantages in GUI perception, reasoning and prediction accuracy, action-space completeness, and task generalization.

- The CogAgent-9B-20241220 model is based on GLM-4V-9B, a bilingual open-source VLM foundation model.

- Uses multi-stage training and strategy improvements to raise accuracy in GUI perception and in reasoning and prediction.

- Model output follows a strict format and is returned as a plain string. JSON output is not supported.

- Does not support multi-turn dialogue, but does support a continuous execution history.

- An image is required as input; text-only dialogue cannot accomplish GUI agent tasks.
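
The last three constraints (string output, no multi-turn dialogue, mandatory image) suggest a client-side pattern: treat every call as an independent single-turn request, and carry earlier steps forward as text. The sketch below illustrates that pattern only. The class `GuiSession`, its method names, the request dictionary keys, and the `Task:` / `History steps:` prompt layout are all hypothetical and are not taken from CogAgent's documented input format.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class GuiSession:
    """Tracks one GUI task across independent single-turn requests (hypothetical helper)."""

    task: str
    # Raw model outputs from earlier steps, stored verbatim as strings.
    history: List[str] = field(default_factory=list)

    def build_request(self, screenshot: bytes) -> dict:
        # An image is mandatory: a text-only request cannot drive a GUI task.
        if not screenshot:
            raise ValueError("a screenshot is required for every request")
        # Assumed prompt layout: the task followed by numbered earlier steps.
        steps = "\n".join(f"{i}. {step}" for i, step in enumerate(self.history))
        prompt = f"Task: {self.task}\nHistory steps:\n{steps}"
        return {"prompt": prompt, "image": screenshot}

    def record(self, model_output: str) -> None:
        # The model returns a plain string, not JSON, so keep it as-is
        # instead of passing it through json.loads.
        self.history.append(model_output.strip())
```

Each new screenshot produces a fresh request whose prompt replays the recorded steps, so the model sees the execution history without any conversational state being kept on the server side.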

Product Details

CogAgent is a GUI agent based on a visual language model (VLM) that supports bilingual (Chinese and English) interaction through screenshots and natural language. It has made significant progress in GUI perception, reasoning and prediction accuracy, action-space completeness, and task generalization. The model has been applied in ZhipuAI's GLM-PC product and aims to help researchers and developers advance the research and application of VLM-based GUI agents.