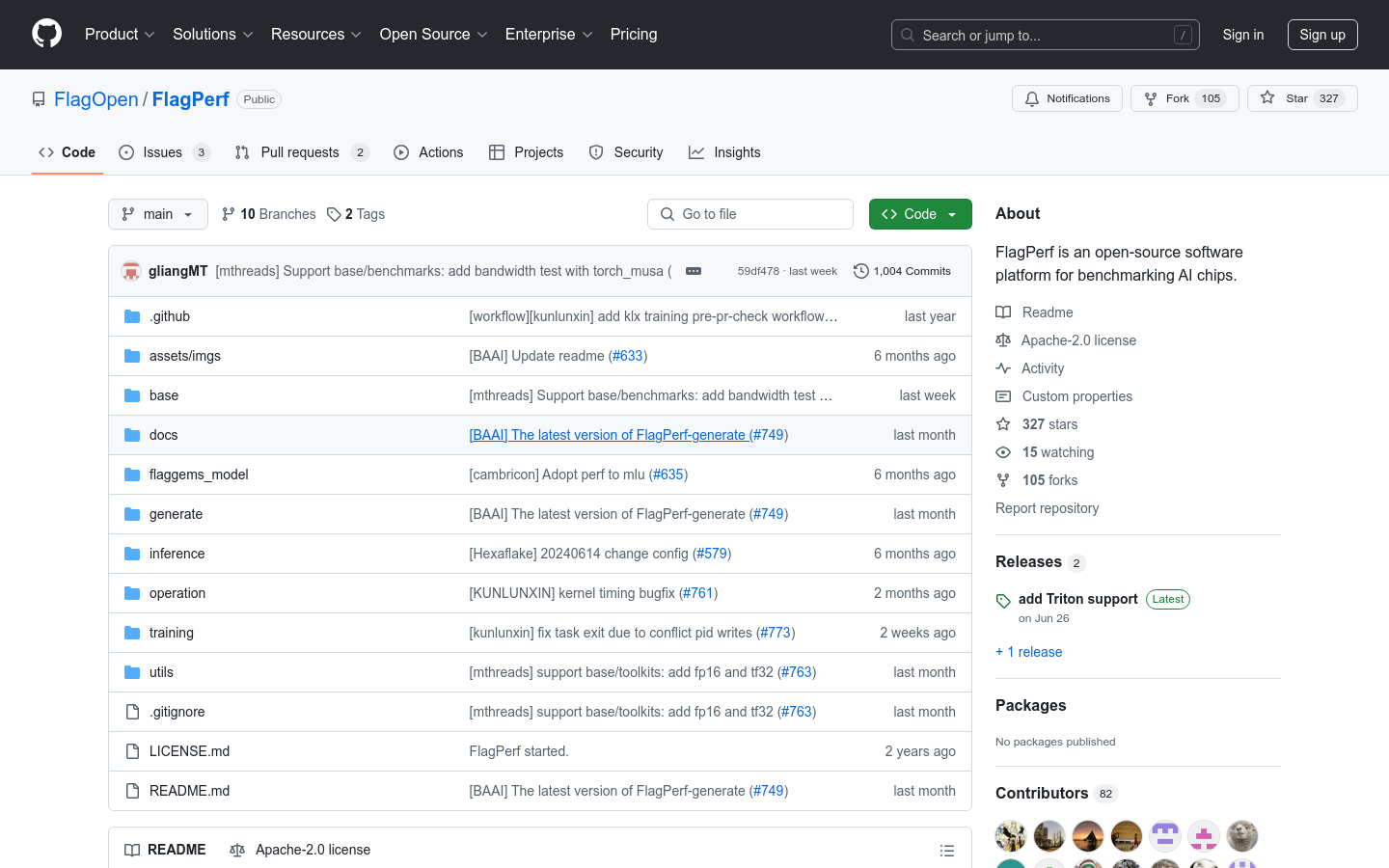

FlagPerf

Open-source AI chip performance benchmarking platform

- Build a multidimensional evaluation index system covering performance, resource utilization, and ecosystem adaptation indicators.

- Support diverse scenarios and tasks, covering more than 30 classic models in fields such as computer vision and natural language processing.

- Support multiple training frameworks and inference engines, such as PyTorch and TensorFlow, and work with Chinese frameworks such as PaddlePaddle and MindSpore.

- Support multiple test environments to evaluate performance comprehensively on a single card, a single machine, and multiple machines.

- Strictly review all evaluation code to ensure that both the testing process and its results are fair.

- Open-source all test code so that the testing process and data are reproducible.

Product Details

FlagPerf is an integrated AI hardware evaluation engine built jointly by the Zhiyuan Research Institute (Beijing Academy of Artificial Intelligence, BAAI) and AI hardware manufacturers. It aims to establish an indicator system grounded in industry practice for evaluating the real-world capabilities of AI hardware when running a combined software stack (model + framework + compiler). The platform provides a multidimensional evaluation index system, covers both large-model training and inference scenarios, and supports multiple training frameworks and inference engines, bridging the AI hardware and software ecosystems.