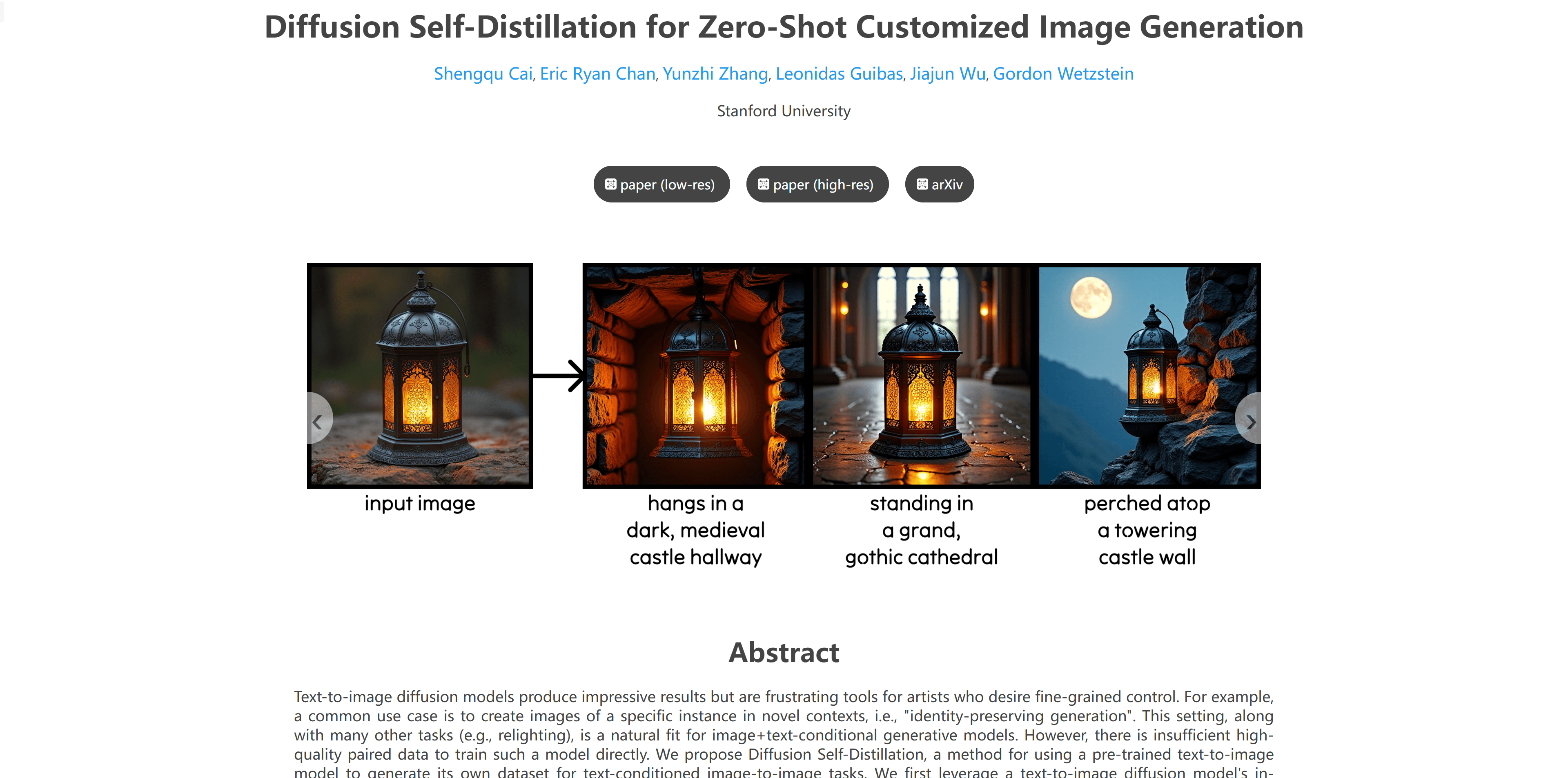

Diffusion Self-Distillation

A diffusion self-distillation technique for zero-shot customized image generation

- Zero-shot customized image generation: generates images of a specific instance in a new context without requiring large amounts of paired data.

- Text-to-image diffusion model: uses a pretrained model to generate image grids, and works with a vision-language model to curate a paired dataset from them.

- Image-to-image fine-tuning: fine-tunes the text-to-image model into a text+image-to-image model to improve the quality and consistency of generated images.

- Identity-preserving generation: maintains the identity of a specific instance (such as a person or an object) across different scenes.

- Automated data curation: uses a vision-language model to automatically filter and classify image pairs, mimicking manual annotation and filtering.

- Information exchange: the model generates two frames, one reconstructing the input image and the other containing the edited output, which enables effective information exchange between them.

- No test-time optimization: unlike traditional per-instance tuning techniques, this technique requires no optimization at test time.
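The data flow described in the list above (split a generated grid into panels, keep only the pairs a judge accepts, and arrange each pair as a two-frame training target) can be sketched roughly as follows. This is a toy illustration under stated assumptions, not the product's implementation: images are plain nested lists of pixel values, and `split_grid`, `curate_pairs`, `two_frame_target`, and `toy_judge` are hypothetical names. In particular, `toy_judge` is only a stand-in for the vision-language model and compares mean brightness.

```python
from itertools import combinations
from typing import Callable, List, Tuple

Image = List[List[int]]  # toy grayscale image: rows of pixel values


def split_grid(grid: Image, rows: int, cols: int) -> List[Image]:
    """Cut a grid image into rows * cols equally sized panels (row-major)."""
    height, width = len(grid), len(grid[0])
    if height % rows or width % cols:
        raise ValueError("grid size must be divisible by rows and cols")
    ph, pw = height // rows, width // cols
    panels = []
    for r in range(rows):
        for c in range(cols):
            band = grid[r * ph:(r + 1) * ph]
            panels.append([row[c * pw:(c + 1) * pw] for row in band])
    return panels


def curate_pairs(
    panels: List[Image],
    same_identity: Callable[[Image, Image], bool],
) -> List[Tuple[Image, Image]]:
    """Keep only the panel pairs that the judge accepts as the same instance."""
    return [(a, b) for a, b in combinations(panels, 2) if same_identity(a, b)]


def two_frame_target(reference: Image, edited: Image) -> List[Image]:
    """Frame 0 reconstructs the input image; frame 1 is the edited output."""
    return [reference, edited]


def mean_brightness(img: Image) -> float:
    return sum(map(sum, img)) / (len(img) * len(img[0]))


def toy_judge(a: Image, b: Image) -> bool:
    """Hypothetical stand-in for a vision-language model's identity check."""
    return abs(mean_brightness(a) - mean_brightness(b)) <= 10


# A 4x4 "grid image" holding four 2x2 panels; one panel is an outlier.
grid = [
    [100, 100, 105, 105],
    [100, 100, 105, 105],
    [200, 200, 102, 102],
    [200, 200, 102, 102],
]
panels = split_grid(grid, rows=2, cols=2)
pairs = curate_pairs(panels, toy_judge)
training_targets = [two_frame_target(a, b) for a, b in pairs]
```

In a real pipeline the judge would be a call to a vision-language model and the panels would come from a pretrained text-to-image model; both are outside the scope of this sketch.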

Product Details

Diffusion Self-Distillation is a diffusion-model-based self-distillation technique for zero-shot customized image generation. It lets artists and users generate their own dataset with a pretrained text-to-image model, without needing large amounts of paired data, and then fine-tune that model for image-to-image tasks conditioned on both text and an image. The method outperforms existing zero-shot methods on identity-preserving generation tasks and is comparable to per-instance tuning techniques, without requiring any test-time optimization.