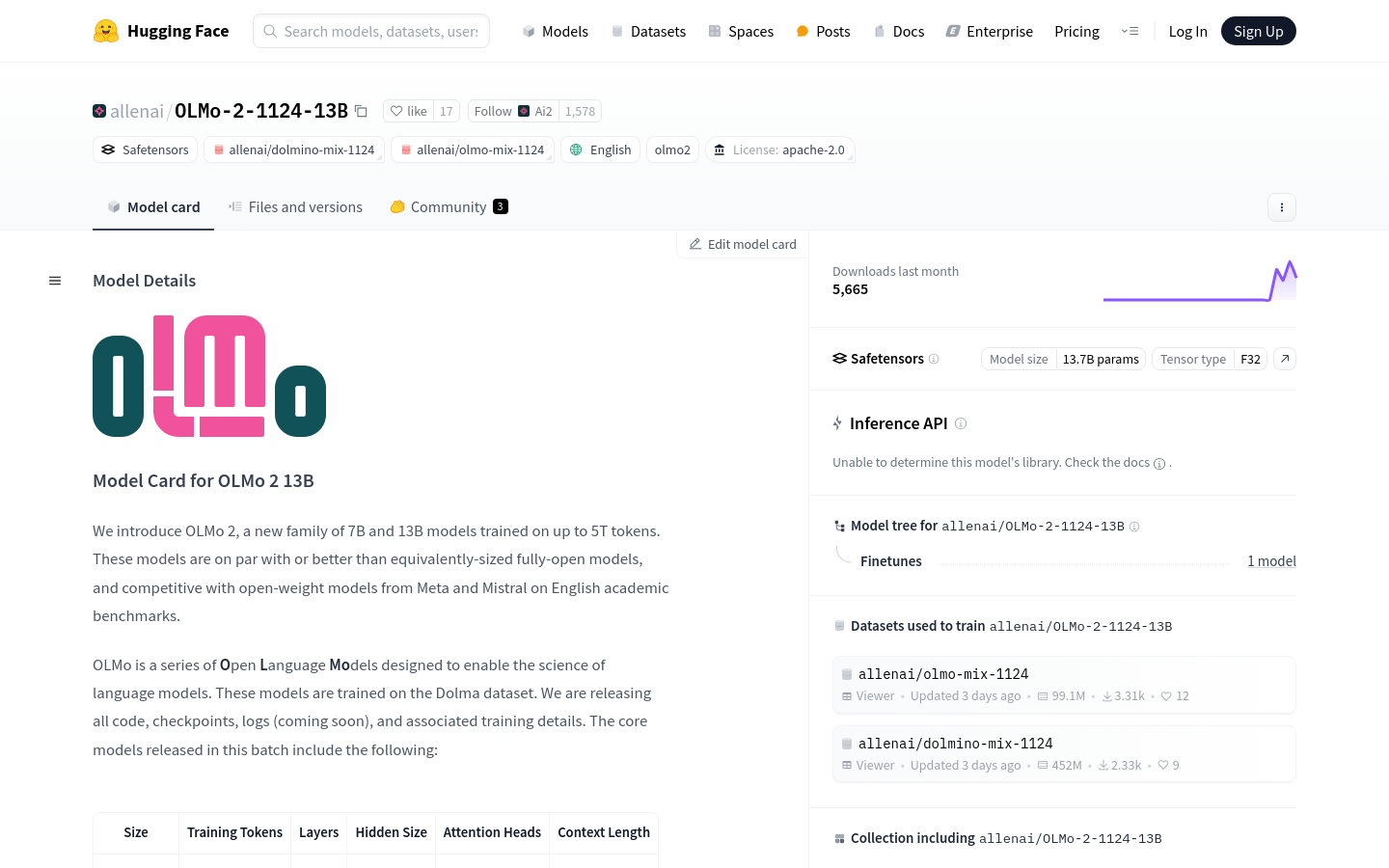

OLMo 2 13B

A high-performance language model evaluated on English academic benchmarks


- Supports a context length of up to 4,096 tokens, suitable for processing longer texts.

- The model was trained on up to 5 trillion tokens and has strong language understanding and generation capabilities.

- Provides multiple fine-tuning options, including SFT, DPO, and PPO.

- The model supports quantization to improve inference speed and reduce resource consumption.

- It integrates easily with Hugging Face's Transformers library.

- The model performs well on multiple English academic benchmarks, such as ARC-Challenge (ARC/C), HellaSwag (HSwag), and WinoGrande (WinoG).
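The Transformers integration and the quantization option listed above can be sketched roughly as follows. This is a minimal illustration, not an official recipe. The repository name `allenai/OLMo-2-1124-13B` is an assumption and should be checked against the model's Hugging Face page. The helpers `clamp_new_tokens`, `build_load_kwargs`, and `generate` are hypothetical names introduced only for this example. The 8-bit path assumes the `bitsandbytes` package is installed, and `device_map="auto"` assumes `accelerate` is installed.

```python
MODEL_ID = "allenai/OLMo-2-1124-13B"  # assumed repository name; verify before use
MAX_CONTEXT = 4096                    # context length stated in the feature list


def clamp_new_tokens(prompt_tokens: int, requested: int,
                     context: int = MAX_CONTEXT) -> int:
    """Limit the number of generated tokens so prompt + output fits the context."""
    remaining = max(context - prompt_tokens, 0)
    return min(requested, remaining)


def build_load_kwargs(quantize_8bit: bool = False) -> dict:
    """Build keyword arguments for AutoModelForCausalLM.from_pretrained."""
    kwargs = {"device_map": "auto"}  # requires the accelerate package
    if quantize_8bit:
        # Imported lazily so the helper works without transformers installed
        # when quantization is not requested.
        from transformers import BitsAndBytesConfig
        kwargs["quantization_config"] = BitsAndBytesConfig(load_in_8bit=True)
    else:
        kwargs["torch_dtype"] = "auto"  # use the dtype stored in the checkpoint
    return kwargs


def generate(prompt: str, max_new_tokens: int = 64,
             quantize_8bit: bool = False) -> str:
    """Load the model and continue the prompt (downloads the full weights)."""
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID, **build_load_kwargs(quantize_8bit)
    )
    inputs = tokenizer(
        prompt, return_tensors="pt", return_token_type_ids=False
    ).to(model.device)
    budget = clamp_new_tokens(inputs["input_ids"].shape[1], max_new_tokens)
    if budget == 0:
        raise ValueError("Prompt already fills the context window.")
    output_ids = model.generate(**inputs, max_new_tokens=budget)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Calling `generate("Language modeling is ", quantize_8bit=True)` would download the 13B checkpoint and load it in 8-bit precision, so it needs substantial disk space and a suitable GPU. The clamp keeps the prompt plus the generated tokens within the 4,096-token window.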

Product Details

OLMo 2 13B is a Transformer-based autoregressive language model developed by the Allen Institute for AI (Ai2), with a focus on performance on English academic benchmarks. The model was trained on up to 5 trillion tokens. It performs comparably to or better than fully open models of the same scale, and is competitive with open-weight models from Meta and Mistral on English academic benchmarks. The OLMo 2 13B release includes all code, checkpoints, logs, and related training details, with the aim of advancing scientific research on language models.