text-to-pose

A model that generates character poses from text descriptions and then generates images from those poses


- Text-to-pose transformation: Uses a Transformer architecture to convert text descriptions into character poses.

- Pose-to-image generation: Generates high-quality images from the generated poses using a diffusion model.

- Model training and optimization: Provides training code and pretrained models so researchers and developers can get started easily.

- Dataset creation: Provides datasets for training and testing, including the annotated COCO-2017 dataset.

- Model comparison: Displays the poses and images generated by different models so their results can be compared side by side.

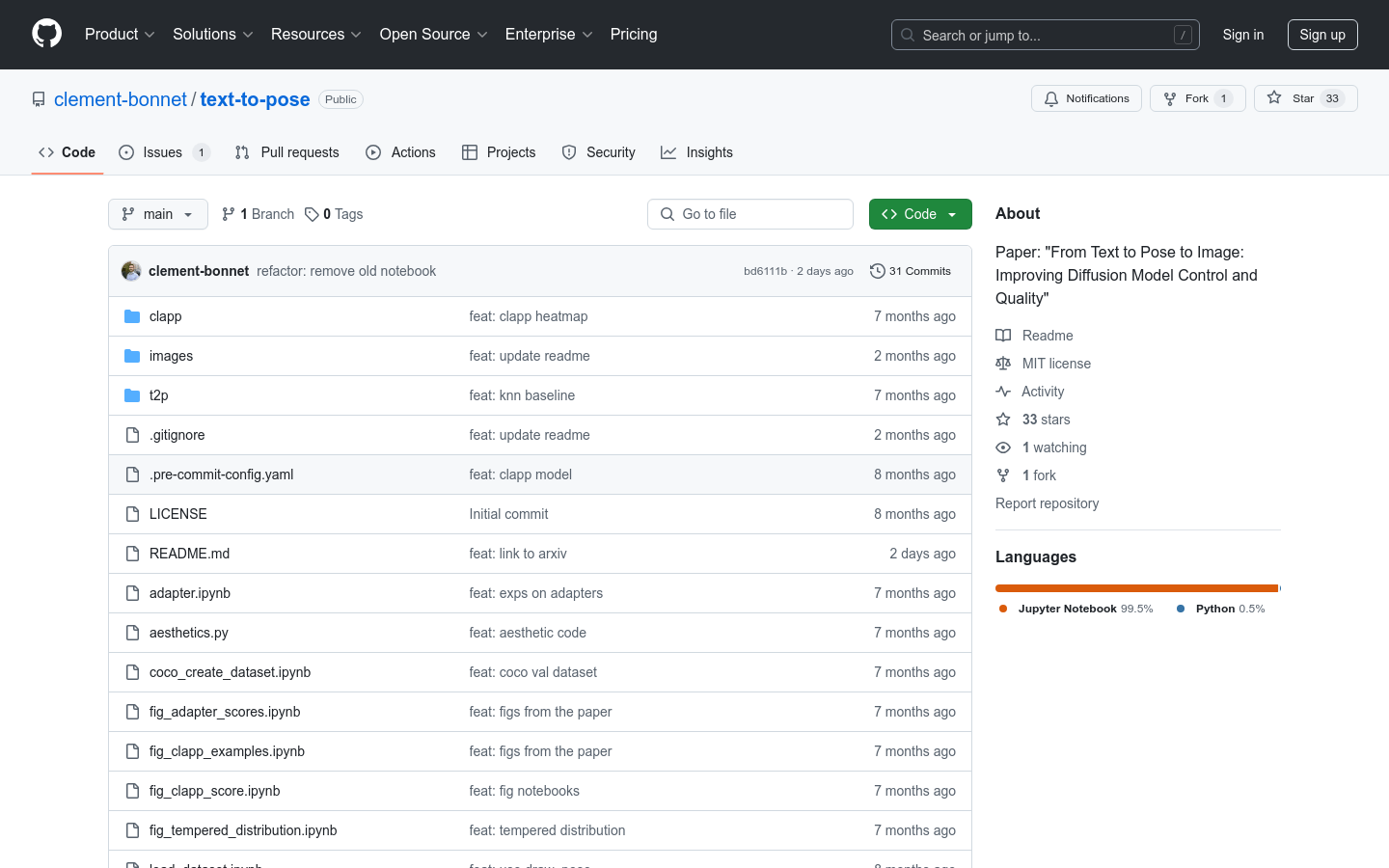

- Code and documentation: Provides detailed code and documentation to help users understand and use the project.
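The feature list does not spell out how a pose is represented. Because the project uses COCO-2017 annotations, a reasonable assumption is that poses follow the COCO person-keypoint layout: 17 named keypoints stored as a flat `[x, y, v]` list, where `v = 0` means unlabeled, `1` labeled but occluded, and `2` labeled and visible. The sketch below parses that layout and lists the limb segments a renderer would draw. The helper names `parse_coco_keypoints` and `limbs` are hypothetical and not taken from the project's code.

```python
from typing import Dict, List, Tuple

# The 17 COCO person keypoints, in annotation order.
COCO_KEYPOINTS = [
    "nose", "left_eye", "right_eye", "left_ear", "right_ear",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_hip", "right_hip",
    "left_knee", "right_knee", "left_ankle", "right_ankle",
]

# Limb connections from the COCO "person" category skeleton (1-indexed).
COCO_SKELETON = [
    (16, 14), (14, 12), (17, 15), (15, 13), (12, 13), (6, 12), (7, 13),
    (6, 7), (6, 8), (7, 9), (8, 10), (9, 11), (2, 3), (1, 2), (1, 3),
    (2, 4), (3, 5), (4, 6), (5, 7),
]

Point = Tuple[float, float]


def parse_coco_keypoints(flat: List[float]) -> Dict[str, Point]:
    """Hypothetical helper: turn [x1, y1, v1, ..., x17, y17, v17] into named
    keypoints, keeping only labeled points (visibility v > 0)."""
    expected = 3 * len(COCO_KEYPOINTS)
    if len(flat) != expected:
        raise ValueError(f"expected {expected} values, got {len(flat)}")
    pose: Dict[str, Point] = {}
    for i, name in enumerate(COCO_KEYPOINTS):
        x, y, v = flat[3 * i: 3 * i + 3]
        if v > 0:
            pose[name] = (x, y)
    return pose


def limbs(pose: Dict[str, Point]) -> List[Tuple[Point, Point]]:
    """Hypothetical helper: return a segment for every skeleton edge whose
    two endpoints are both present in the pose."""
    segments = []
    for a, b in COCO_SKELETON:
        name_a, name_b = COCO_KEYPOINTS[a - 1], COCO_KEYPOINTS[b - 1]
        if name_a in pose and name_b in pose:
            segments.append((pose[name_a], pose[name_b]))
    return segments


# Example: only the two shoulders and the left elbow are labeled.
example = [0.0] * 51
example[15:18] = [100.0, 50.0, 2]  # left_shoulder, visible
example[18:21] = [60.0, 50.0, 2]   # right_shoulder, visible
example[21:24] = [110.0, 90.0, 1]  # left_elbow, labeled but occluded
print(limbs(parse_coco_keypoints(example)))
# [((100.0, 50.0), (60.0, 50.0)), ((100.0, 50.0), (110.0, 90.0))]
```

Keeping occluded (`v = 1`) points is a design choice in this sketch. A model that should only see visible joints would filter on `v == 2` instead.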

Product Details

Text-to-pose is a research project that generates character poses from text descriptions and then uses those poses to generate images. It combines natural language processing and computer vision, and approaches text-to-image generation by improving the controllability and quality of diffusion models. The project builds on work published at a NeurIPS 2024 workshop. Its main advantages are more accurate and more controllable image generation, with potential applications in fields such as art creation and virtual reality.
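This description does not say exactly how a generated pose steers the diffusion model. One common approach in pose-guided diffusion (for example, ControlNet-style conditioning) is to render the skeleton as an image and feed that image to the model as an extra input. The following is a minimal, dependency-free sketch of that rendering step only, assuming the pose is already available as 2D limb segments in pixel coordinates. `render_pose_map` is a hypothetical helper. A real pipeline would more likely draw thick, color-coded limbs with an imaging library.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def draw_line(grid: List[List[int]], p0: Tuple[int, int], p1: Tuple[int, int]) -> None:
    """Set every cell on the segment p0-p1 to 1 (Bresenham's line algorithm)."""
    (x0, y0), (x1, y1) = p0, p1
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    height, width = len(grid), len(grid[0])
    while True:
        if 0 <= y0 < height and 0 <= x0 < width:  # clip points outside the canvas
            grid[y0][x0] = 1
        if x0 == x1 and y0 == y1:
            break
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


def render_pose_map(segments: List[Tuple[Point, Point]], width: int, height: int) -> List[List[int]]:
    """Hypothetical helper: rasterize limb segments into a height x width binary map."""
    grid = [[0] * width for _ in range(height)]
    for (xa, ya), (xb, yb) in segments:
        draw_line(grid, (round(xa), round(ya)), (round(xb), round(yb)))
    return grid


# Example: a horizontal "shoulder line" crossed by a vertical "torso".
pose_map = render_pose_map([((1.0, 1.0), (5.0, 1.0)), ((3.0, 0.0), (3.0, 4.0))], width=7, height=5)
for row in pose_map:
    print("".join("#" if cell else "." for cell in row))
# ...#...
# .#####.
# ...#...
# ...#...
# ...#...
```

A binary map is the simplest possible conditioning signal. Pose-conditioned models usually benefit from per-limb colors, so left and right limbs can be told apart.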