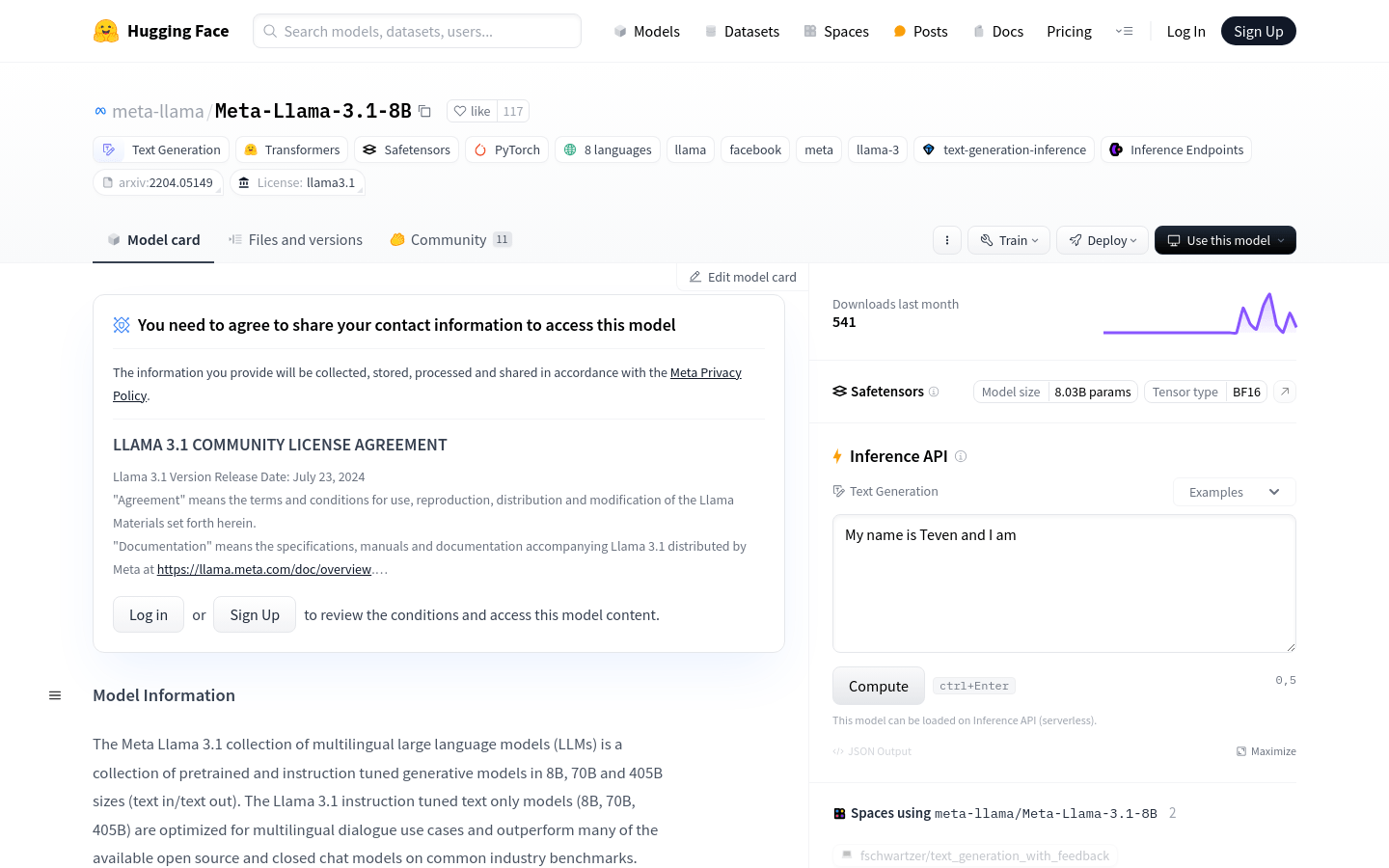

Meta-Llama-3.1-8B

A multilingual large language model for text generation, with 8B parameters

- Supports text generation and dialogue in 8 languages

- Uses an optimized Transformer architecture to improve model performance

- Is trained with supervised fine-tuning and reinforcement learning from human feedback to align with human preferences

- Accepts multilingual input and produces multilingual output

- Is available as static (pretrained) and instruction-tuned models to suit different natural language generation tasks

- Allows model outputs to be used to improve other models, including synthetic data generation and model distillation

Product Details

Meta Llama 3.1 is a family of pretrained and instruction-tuned multilingual large language models (LLMs), available in 8B, 70B, and 405B sizes. The models support 8 languages, are optimized for multilingual dialogue use cases, and perform strongly on common industry benchmarks. Llama 3.1 is an autoregressive language model that uses an optimized Transformer architecture. The tuned versions use supervised fine-tuning (SFT) and reinforcement learning from human feedback (RLHF) to improve the model's helpfulness and safety.
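
To illustrate what "autoregressive" means here, the following is a minimal sketch of greedy decoding: the model scores candidate next tokens given the tokens so far, the highest-scoring token is appended, and the loop repeats until an end-of-sequence token appears. This is not Llama's implementation. The `TOY_SCORES` table, `next_token_scores`, and `greedy_generate` are hypothetical names invented for this example, and the toy scorer looks only at the last token, whereas a real Transformer conditions on the whole prefix.

```python
from typing import Callable, Dict, List

# Hypothetical toy "model": maps the most recent token to next-token scores.
# A real LLM would compute these scores from the full prefix with a Transformer.
TOY_SCORES: Dict[str, Dict[str, float]] = {
    "<s>": {"hello": 0.9, "world": 0.1},
    "hello": {"world": 0.8, "</s>": 0.2},
    "world": {"</s>": 1.0},
}


def next_token_scores(prefix: List[str]) -> Dict[str, float]:
    """Return scores for each candidate next token (toy lookup, assumption)."""
    return TOY_SCORES.get(prefix[-1], {"</s>": 1.0})


def greedy_generate(
    prefix: List[str],
    score_fn: Callable[[List[str]], Dict[str, float]] = next_token_scores,
    max_new_tokens: int = 10,
    eos: str = "</s>",
) -> List[str]:
    """Autoregressive loop: each new token is chosen given all tokens so far."""
    tokens = list(prefix)  # copy so the caller's list is not modified
    for _ in range(max_new_tokens):
        scores = score_fn(tokens)
        best = max(scores, key=scores.get)  # greedy: take the top-scoring token
        if best == eos:
            break
        tokens.append(best)
    return tokens


if __name__ == "__main__":
    print(greedy_generate(["<s>"]))
```

In practice, generation usually samples from the score distribution (for example with a temperature or top-p setting) rather than always taking the top token. Greedy selection is used here only to keep the sketch deterministic.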