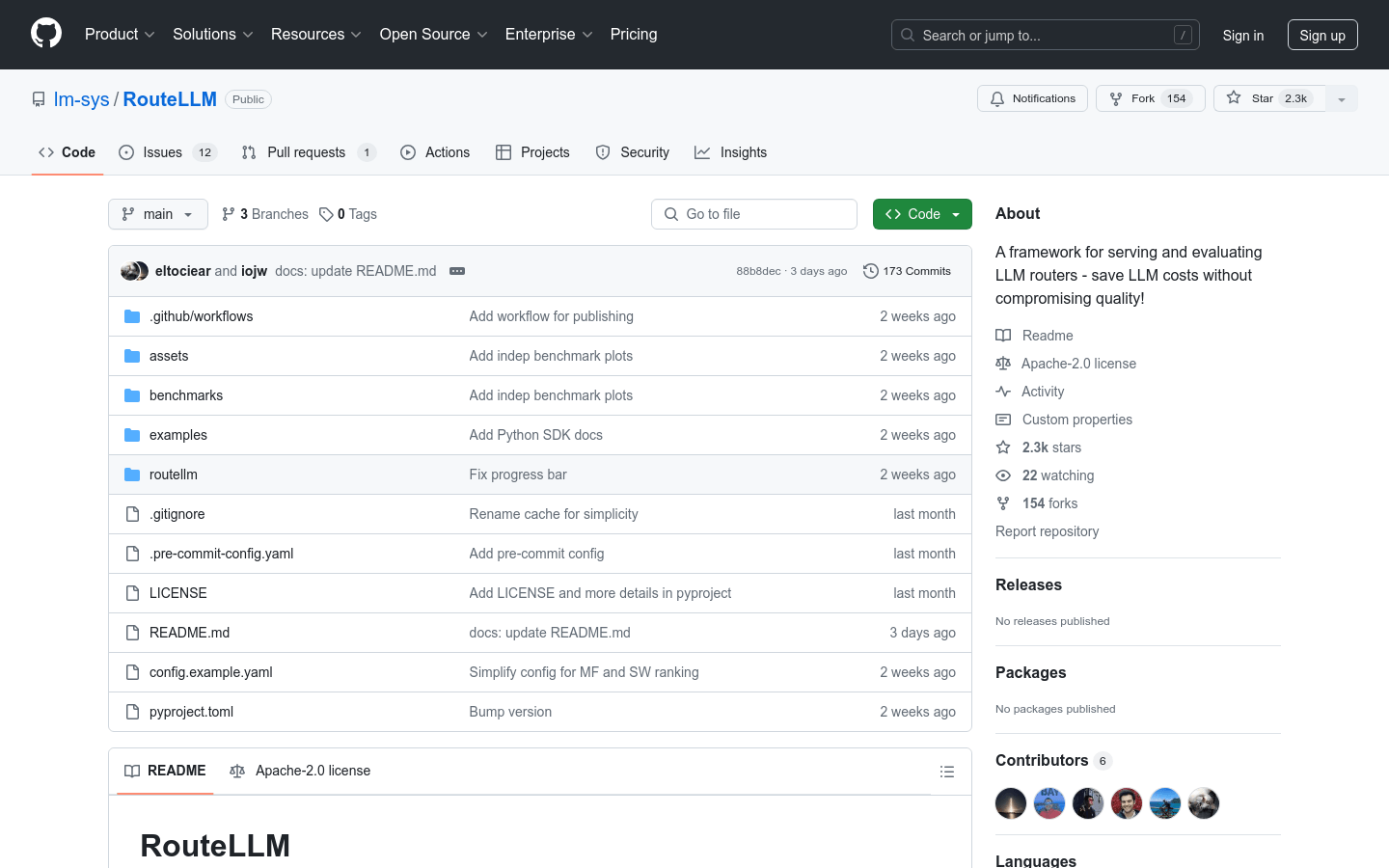

RouteLLM

A framework that saves LLM costs without sacrificing quality

- Serves as a drop-in replacement for the OpenAI client, routing simpler queries to lower-cost models.

- Provides trained routers that reduce costs while maintaining performance.

- Supports adding new routers and comparing the performance of different routers through configuration files or parameters.

- Supports routing to local models and launching an OpenAI-compatible server.

- Provides threshold calibration to tune the balance between cost and quality.

- Includes an evaluation framework for measuring the performance of different routing strategies on benchmarks.

Product Details

RouteLLM is a framework for serving and evaluating Large Language Model (LLM) routers. It routes each query between models of different cost and capability, sending a query to a cheaper model when that model is likely to answer it well, which saves costs while maintaining response quality. It provides trained routers out of the box, which have been shown to reduce costs by up to 85% while maintaining 95% of GPT-4's performance on widely used benchmarks.
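To make the routing and threshold-calibration idea concrete, here is a minimal Python sketch. It is not RouteLLM's actual API: `ThresholdRouter`, `calibrate_threshold`, `toy_score`, and the model names are hypothetical, and the scoring function is a toy stand-in for a trained router. It only assumes that a router assigns each prompt a score in [0, 1] and that prompts scoring at or above a threshold go to the stronger, more expensive model.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class ThresholdRouter:
    """Route a prompt to a strong or weak model by comparing a score to a threshold."""

    score_fn: Callable[[str], float]  # hypothetical scorer returning a value in [0, 1]
    threshold: float
    strong_model: str = "strong-model"  # placeholder model names
    weak_model: str = "weak-model"

    def route(self, prompt: str) -> str:
        # Scores at or above the threshold are sent to the stronger model.
        if self.score_fn(prompt) >= self.threshold:
            return self.strong_model
        return self.weak_model


def calibrate_threshold(scores: Sequence[float], strong_fraction: float) -> float:
    """Pick a threshold so that roughly `strong_fraction` of the sample
    scores fall at or above it (ties can push the share slightly higher)."""
    if not scores:
        raise ValueError("scores must not be empty")
    if not 0.0 <= strong_fraction <= 1.0:
        raise ValueError("strong_fraction must be between 0 and 1")
    ranked = sorted(scores, reverse=True)
    k = round(strong_fraction * len(ranked))
    if k == 0:
        return float("inf")  # no query reaches the strong model
    return ranked[k - 1]


def toy_score(prompt: str) -> float:
    """Toy stand-in for a trained router: longer prompts count as harder."""
    return min(len(prompt.split()) / 20.0, 1.0)


if __name__ == "__main__":
    sample_prompts = [
        "Hi",
        "What is the capital of France?",
        "Summarize the main trade-offs between optimistic and pessimistic locking in databases",
        "Write a proof sketch that every bounded monotone sequence of real numbers converges",
    ]
    sample_scores = [toy_score(p) for p in sample_prompts]
    # Aim to send about half of the sample traffic to the strong model.
    threshold = calibrate_threshold(sample_scores, strong_fraction=0.5)
    router = ThresholdRouter(score_fn=toy_score, threshold=threshold)
    for prompt in sample_prompts:
        print(router.route(prompt), "<-", prompt)
```

Raising the threshold sends more traffic to the cheaper model and lowers cost; lowering it favors quality. Calibrating against a sample of representative queries, as sketched here, is one way to pick a threshold that matches a target share of strong-model calls.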