Llama3

A large language model available in multiple parameter sizes

- Supports multiple pretrained models in 8B and 70B parameter sizes

- Provides instruction-tuned versions that improve the model's performance on specific tasks

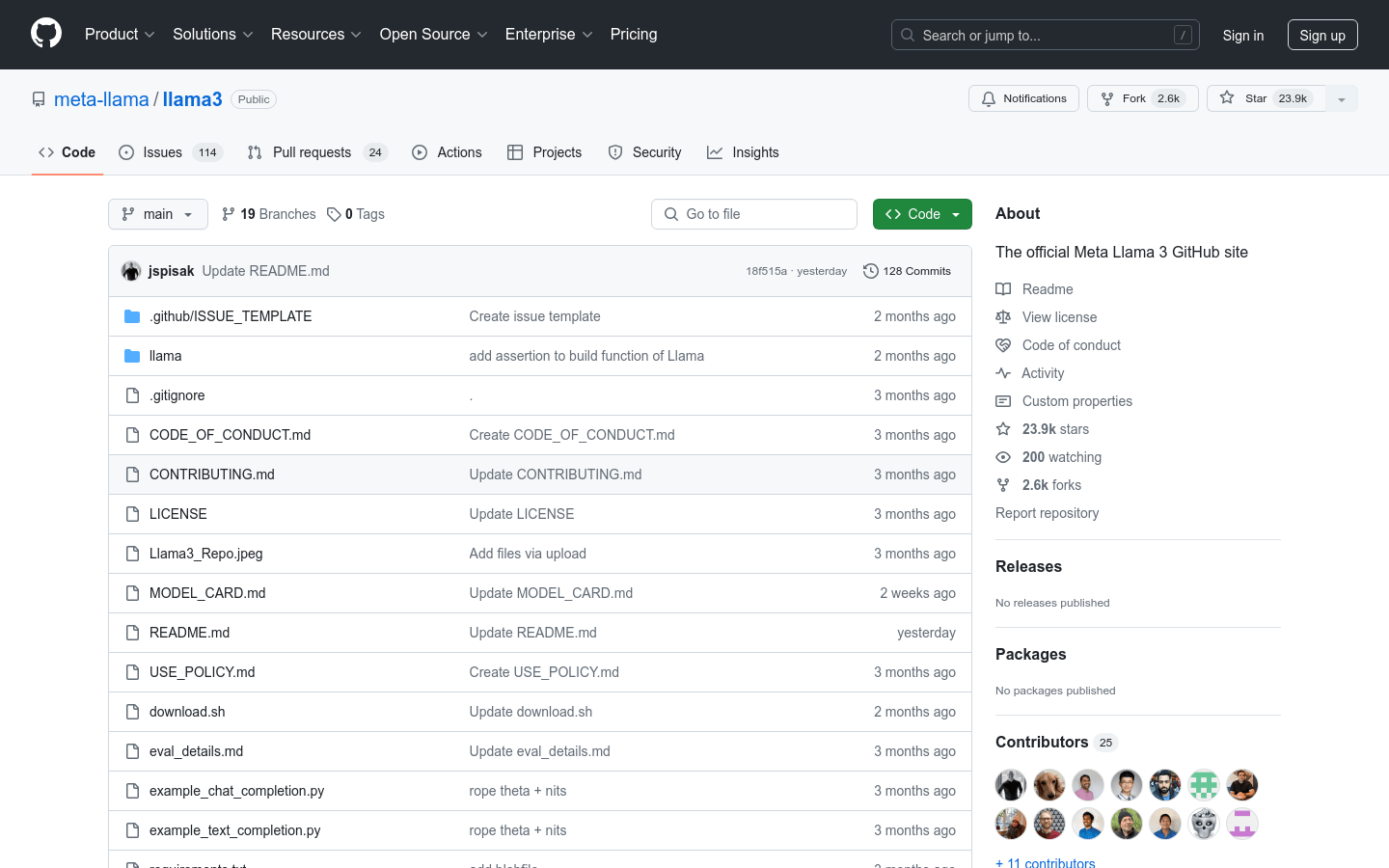

- Provides model code and weight downloads through the GitHub repository

- Supports local inference, so users can run the models on their own devices

- Provides detailed user guides and example code to help users get started quickly

- Supports model parallelism (MP) to adapt to different hardware configurations

- Supports sequence lengths of up to 8192 tokens, accommodating longer and more complex tasks
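Model parallelism spreads a single model's weights across several devices. As a rough illustration of the idea, and not the repository's implementation, the sketch below splits one linear layer's weight matrix by output columns across `mp_size` simulated ranks in a single process, lets each rank compute its slice of the output, and concatenates the slices. The function names are hypothetical; in a real multi-GPU setup each shard would live on its own device and the concatenation would be a collective all-gather.

```python
from typing import List

Matrix = List[List[float]]


def matvec(x: List[float], w: Matrix) -> List[float]:
    """Compute y = x @ w, where w is row-major with shape (in_dim, out_dim)."""
    out_dim = len(w[0])
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(out_dim)]


def shard_columns(w: Matrix, mp_size: int) -> List[Matrix]:
    """Split w's output columns into mp_size contiguous shards, one per rank."""
    out_dim = len(w[0])
    if out_dim % mp_size != 0:
        raise ValueError("out_dim must be divisible by mp_size")
    step = out_dim // mp_size
    return [[row[r * step:(r + 1) * step] for row in w] for r in range(mp_size)]


def column_parallel_matvec(x: List[float], w: Matrix, mp_size: int) -> List[float]:
    """Each simulated rank multiplies the full input by its own weight shard.
    Concatenating the partial outputs in rank order (a stand-in for an
    all-gather across devices) reproduces the unsharded result."""
    partials = [matvec(x, shard) for shard in shard_columns(w, mp_size)]
    return [value for part in partials for value in part]


if __name__ == "__main__":
    x = [1.0, 2.0]
    w = [[1.0, 0.0, 2.0, 1.0],
         [0.0, 1.0, 1.0, 3.0]]
    print(matvec(x, w))                     # [1.0, 2.0, 4.0, 7.0]
    print(column_parallel_matvec(x, w, 2))  # [1.0, 2.0, 4.0, 7.0]
```

Splitting by output columns means each rank needs the full input but only `1/mp_size` of the layer's weights, which is what allows a model too large for one device to run across several.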

Product Details

Meta Llama 3 is the latest large language model released by Meta, intended to make the capabilities of large language models available to individuals, creators, researchers, and businesses. It comes in 8B and 70B parameter sizes, each in both pretrained and instruction-tuned variants. The model is distributed through the GitHub repository, and users can run inference locally after downloading the model weights and tokenizer. The release of Meta Llama 3 further widens access to large language model technology and holds significant potential for both research and commercial use.
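The instruction-tuned variants expect prompts in a chat format built from special tokens. The sketch below renders that layout as a plain string, assuming the `<|begin_of_text|>`, `<|start_header_id|>`, `<|end_header_id|>` and `<|eot_id|>` markers used by the Llama 3 instruct models; the helper names `format_header` and `build_chat_prompt` are hypothetical. In practice the prompt is converted to token IDs by the tokenizer downloaded alongside the weights, so treat this as an illustration of the format rather than a drop-in replacement.

```python
from typing import Dict, List

# Special tokens of the Llama 3 instruction-tuned chat format (assumed here).
BEGIN_OF_TEXT = "<|begin_of_text|>"
START_HEADER = "<|start_header_id|>"
END_HEADER = "<|end_header_id|>"
END_OF_TURN = "<|eot_id|>"


def format_header(role: str) -> str:
    """Role header followed by the blank line that separates it from the content."""
    return f"{START_HEADER}{role}{END_HEADER}\n\n"


def build_chat_prompt(messages: List[Dict[str, str]]) -> str:
    """Render {"role", "content"} messages as one prompt string.

    Each message's content is stripped and closed with an end-of-turn marker.
    The prompt ends with an open assistant header so the model writes the reply.
    """
    prompt = BEGIN_OF_TEXT
    for message in messages:
        prompt += format_header(message["role"])
        prompt += message["content"].strip() + END_OF_TURN
    prompt += format_header("assistant")
    return prompt


if __name__ == "__main__":
    dialog = [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "What is model parallelism?"},
    ]
    print(build_chat_prompt(dialog))
```

Because the prompt ends with an open assistant header, generation continues with the assistant's reply, which by convention ends at the next `<|eot_id|>`.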